- 1. Installation Overview

- 2. Requirements

- 3. Installing the oVirt Engine

- 3.1. Installing the oVirt Engine Machine and the Remote Server

- 3.2. Enabling the oVirt Engine Repositories

- 3.3. Preparing a Remote PostgreSQL Database

- 3.4. Installing and Configuring the oVirt Engine

- 3.5. Installing and Configuring Data Warehouse on a Separate Machine

- 3.6. Connecting to the Administration Portal

- 4. Installing Hosts for oVirt

- 5. Preparing Storage for oVirt

- 6. Adding Storage for oVirt

- Appendix A: Configuring a Host for PCI Passthrough

- Appendix B: Removing the standalone oVirt Engine

- Appendix C: Preventing kernel modules from loading automatically

- Appendix D: Legal notice

Installing oVirt as a standalone Engine with remote databases

Standalone Engine installation is manual and customizable. You must install an Enterprise Linux machine, then run the configuration script (engine-setup) and provide information about how you want to configure the oVirt Engine. Add hosts and storage after the Engine is running. At least two hosts are required for virtual machine high availability.

To install the Engine with a remote Engine database, manually create the database on the remote machine before running engine-setup. To install the Data Warehouse database on a remote machine, run the Data Warehouse configuration script (ovirt-engine-dwh-setup) on the remote machine. This script installs the Data Warehouse service and can create the Data Warehouse database automatically.

See the Planning and Prerequisites Guide for information on environment options and recommended configuration.

oVirt Key Components

| Component Name | Description |
|---|---|
| oVirt Engine | A service that provides a graphical user interface and a REST API to manage the resources in the environment. The Engine is installed on a physical or virtual machine running Enterprise Linux. |
| Hosts | Enterprise Linux hosts and oVirt Nodes (image-based hypervisors) are the two supported types of host. Hosts use Kernel-based Virtual Machine (KVM) technology and provide resources used to run virtual machines. |
| Shared Storage | A storage service is used to store the data associated with virtual machines. |
| Data Warehouse | A service that collects configuration information and statistical data from the Engine. |

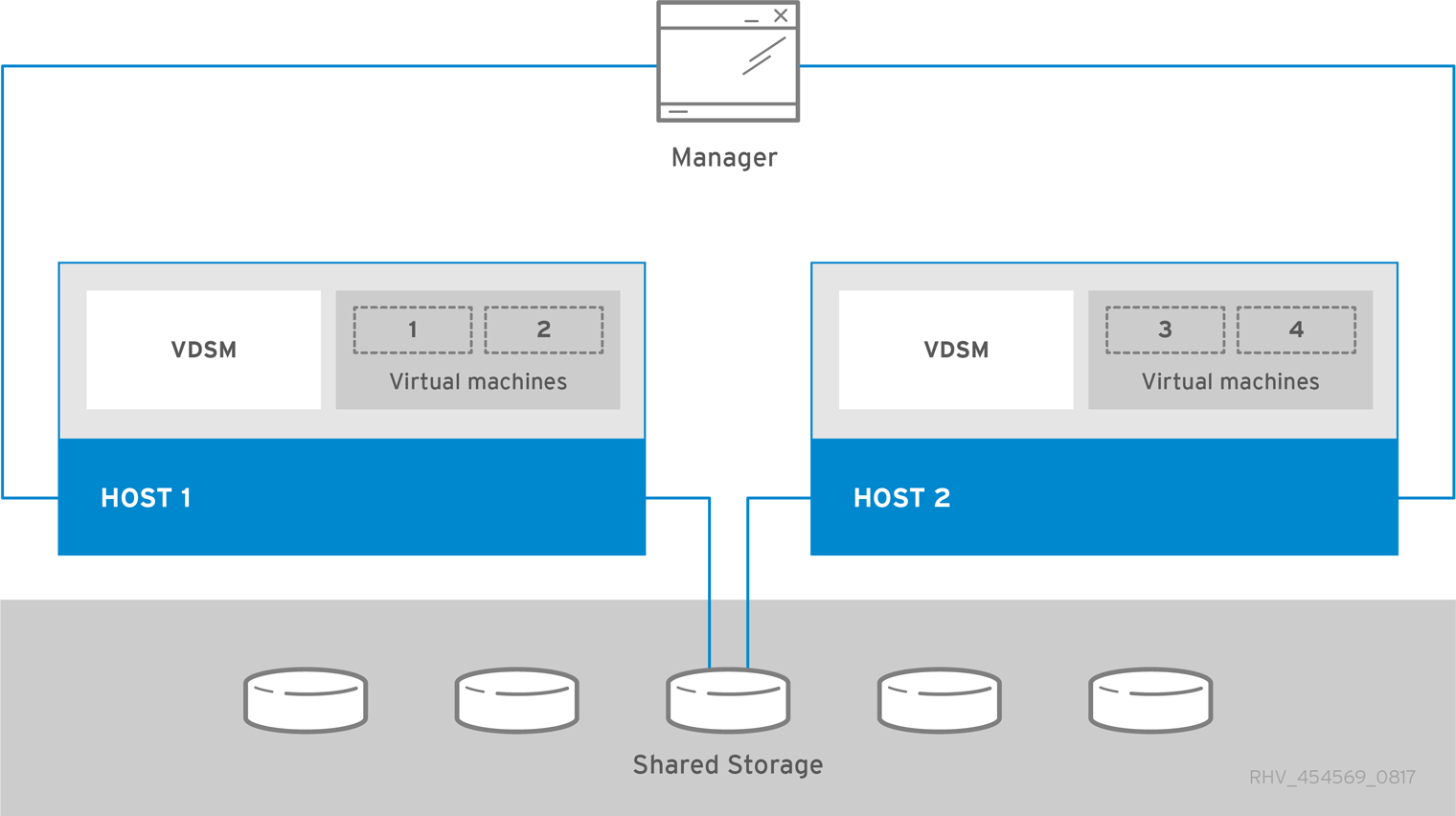

Standalone Engine Architecture

The oVirt Engine runs on a physical server, or a virtual machine hosted in a separate virtualization environment. A standalone Engine is easier to deploy and manage, but requires an additional physical server. The Engine is only highly available when managed externally with a supported High Availability Add-On.

The minimum setup for a standalone Engine environment includes:

- One oVirt Engine machine. The Engine is typically deployed on a physical server. However, it can also be deployed on a virtual machine, as long as that virtual machine is hosted in a separate environment. The Engine must run on Enterprise Linux 9.

- A minimum of two hosts for virtual machine high availability. You can use Enterprise Linux hosts or oVirt Nodes. VDSM (the host agent) runs on all hosts to facilitate communication with the oVirt Engine.

- One storage service, which can be hosted locally or on a remote server, depending on the storage type used. The storage service must be accessible to all hosts.

1. Installation Overview

Installing a standalone Engine environment with remote databases involves the following steps:

- Install and configure the oVirt Engine:

  - Install two Enterprise Linux machines: one for the Engine, and one for the databases. The second machine is referred to as the remote server.

  - Manually configure the Engine database on the remote server. You can also use this procedure to manually configure the Data Warehouse database if you do not want the Data Warehouse setup script to configure it automatically.

  - Install the Data Warehouse service and database on the remote server.

  - Connect to the Administration Portal to add hosts and storage domains.

- Install hosts to run virtual machines on. Use oVirt Nodes, Enterprise Linux hosts, or both.

- Prepare storage to use for storage domains. For the supported storage types, see Preparing Storage for oVirt.

Keep the environment up to date. Because bug fixes for known issues are released frequently, use scheduled tasks to update the hosts and the Engine.

2. Requirements

2.1. oVirt Engine Requirements

2.1.1. Hardware Requirements

The minimum and recommended hardware requirements outlined here are based on a typical small to medium-sized installation. The exact requirements vary between deployments based on sizing and load.

The oVirt Engine runs on Enterprise Linux operating systems such as CentOS Stream 9 or AlmaLinux 9.

| Resource | Minimum | Recommended |
|---|---|---|
| CPU | A dual core x86_64 CPU. | A quad core x86_64 CPU or multiple dual core x86_64 CPUs. |
| Memory | 4 GB of available system RAM if Data Warehouse is not installed and if memory is not being consumed by existing processes. | 16 GB of system RAM. |
| Hard Disk | 25 GB of locally accessible, writable disk space. | 50 GB of locally accessible, writable disk space. |
| Network Interface | 1 Network Interface Card (NIC) with bandwidth of at least 1 Gbps. | 1 Network Interface Card (NIC) with bandwidth of at least 1 Gbps. |
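The Minimum column can be turned into a quick pre-flight check before installing the Engine. The following Python sketch is only an illustration: the helper names are hypothetical, `os.cpu_count()` reports logical CPUs rather than physical cores, free space on `/` stands in for "locally accessible, writable disk space", and GB and GiB are treated as interchangeable. The 4 GB memory minimum also assumes Data Warehouse is not installed on the same machine.

```python
import os
import shutil

# Minimum column of the Engine hardware requirements table.
MIN_CPUS = 2       # "dual core"; os.cpu_count() reports logical CPUs
MIN_RAM_GB = 4     # only valid when Data Warehouse is not installed locally
MIN_DISK_GB = 25   # locally accessible, writable disk space


def check_engine_minimums(cpus, ram_gb, free_disk_gb):
    """Return a list of problems; an empty list means every minimum is met."""
    problems = []
    if cpus < MIN_CPUS:
        problems.append(f"CPU: {cpus} available, minimum is {MIN_CPUS}")
    if ram_gb < MIN_RAM_GB:
        problems.append(f"Memory: {ram_gb:.1f} GiB, minimum is {MIN_RAM_GB} GB")
    if free_disk_gb < MIN_DISK_GB:
        problems.append(f"Disk: {free_disk_gb:.1f} GiB free, minimum is {MIN_DISK_GB} GB")
    return problems


def mem_total_gb(path="/proc/meminfo"):
    """Read MemTotal (reported in KiB) from /proc/meminfo and convert it to GiB."""
    with open(path) as f:
        for line in f:
            if line.startswith("MemTotal:"):
                return int(line.split()[1]) / (1024 * 1024)
    raise ValueError(f"MemTotal not found in {path}")


if __name__ == "__main__" and os.path.exists("/proc/meminfo"):
    free_gb = shutil.disk_usage("/").free / 1024**3
    issues = check_engine_minimums(os.cpu_count() or 0, mem_total_gb(), free_gb)
    print("\n".join(issues) if issues else "All minimum requirements are met.")
```

Note that a machine sold with exactly 4 GB of RAM may report a MemTotal slightly below 4 GiB, because the kernel reserves some memory, so a borderline result deserves a manual look.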

2.1.2. Browser Requirements

The following browser versions and operating systems can be used to access the Administration Portal and the VM Portal.

Browser testing is divided into tiers:

- Tier 1: Browser and operating system combinations that are fully tested.

- Tier 2: Browser and operating system combinations that are partially tested, and are likely to work.

- Tier 3: Browser and operating system combinations that are not tested, but may work.

| Support Tier | Operating System Family | Browser |
|---|---|---|
| Tier 1 | Enterprise Linux | Mozilla Firefox Extended Support Release (ESR) version |
| | Any | Most recent version of Google Chrome, Mozilla Firefox, or Microsoft Edge |
| Tier 2 | | |
| Tier 3 | Any | Earlier versions of Google Chrome or Mozilla Firefox |
| | Any | Other browsers |

2.1.3. Client Requirements

Virtual machine consoles can only be accessed using supported Remote Viewer (virt-viewer) clients on Enterprise Linux and Windows. To install virt-viewer, see Installing Supporting Components on Client Machines in the Virtual Machine Management Guide. Installing virt-viewer requires Administrator privileges.

You can access virtual machine consoles using the SPICE, VNC, or RDP (Windows only) protocols. You can install the QXLDOD graphical driver in the guest operating system to improve the functionality of SPICE. SPICE currently supports a maximum resolution of 2560x1600 pixels.

Supported QXLDOD drivers are available on Enterprise Linux 7.2 and later, and Windows 10.

SPICE may work with Windows 8 or 8.1 using QXLDOD drivers, but it is neither certified nor tested.

2.1.4. Operating System Requirements

The oVirt Engine must be installed on a base installation of Enterprise Linux 9 or later.

Do not install any additional packages after the base installation, as they may cause dependency issues when attempting to install the packages required by the Engine.

Do not enable additional repositories other than those required for the Engine installation.

2.2. Host Requirements

2.2.1. CPU Requirements

All CPUs must have support for the Intel® 64 or AMD64 CPU extensions, and the AMD-V™ or Intel VT® hardware virtualization extensions enabled. Support for the No eXecute flag (NX) is also required.

The following CPU models are supported on the latest cluster version (4.8):

- AMD

  - Opteron G4

  - Opteron G5

  - EPYC

  - EPYC-Rome

  - EPYC-Milan

  - EPYC-Genoa

- Intel

  - Nehalem

  - Westmere

  - SandyBridge

  - IvyBridge

  - Haswell

  - Broadwell

  - Skylake Client

  - Skylake Server

  - Cascadelake Server

  - IceLake Server

  - Sapphire Rapids Server

- IBM

  - POWER8

  - POWER9

  - POWER10

Enterprise Linux 9 does not support virtualization on ppc64le. The CentOS Virtualization SIG is working on reintroducing virtualization support, but it is not ready yet.

The oVirt project also provides packages for the 64-bit ARM architecture (ARM 64) but only as a Technology Preview.

For each CPU model with security updates, the CPU Type lists a basic type and a secure type. For example:

- Intel Cascadelake Server Family

- Secure Intel Cascadelake Server Family

The Secure CPU type contains the latest updates. For details, see BZ#1731395.

Checking if a Processor Supports the Required Flags

You must enable virtualization in the BIOS. Power off and reboot the host after this change to ensure that the change is applied.

- At the Enterprise Linux or oVirt Node boot screen, press any key and select the Boot or Boot with serial console entry from the list.

- Press Tab to edit the kernel parameters for the selected option.

- Ensure there is a space after the last kernel parameter listed, and append the parameter rescue.

- Press Enter to boot into rescue mode.

- At the prompt, determine that your processor has the required extensions and that they are enabled by running this command:

# grep -E 'svm|vmx' /proc/cpuinfo | grep nx

If any output is shown, the processor is hardware virtualization capable. If no output is shown, your processor may still support hardware virtualization; in some circumstances manufacturers disable the virtualization extensions in the BIOS. If you believe this to be the case, consult the system’s BIOS and the motherboard manual provided by the manufacturer.
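If you want to run the same test from a script, for example across many candidate hosts, the following Python sketch mirrors the grep pipeline above. It is an assumption-laden illustration, not an oVirt tool: the function name is hypothetical, it only understands the x86 `flags` lines of /proc/cpuinfo, and it matches whole flag names, whereas grep matches substrings.

```python
import os


def supports_hw_virtualization(cpuinfo_text):
    """Mirror `grep -E 'svm|vmx' /proc/cpuinfo | grep nx` using exact flag names.

    Returns True if any x86 'flags' line lists vmx (Intel VT-x) or svm (AMD-V)
    together with nx (No eXecute). Other architectures use different keys.
    """
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            flags = set(value.split())
            if flags & {"vmx", "svm"} and "nx" in flags:
                return True
    return False


if __name__ == "__main__" and os.path.exists("/proc/cpuinfo"):
    with open("/proc/cpuinfo") as f:
        print("hardware virtualization capable:", supports_hw_virtualization(f.read()))
```

As with the grep command, a False result does not prove the processor lacks the extensions; they may be disabled in the BIOS.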

2.2.2. Memory Requirements

The minimum required RAM is 2 GB. For cluster levels 4.2 to 4.5, the maximum supported RAM per VM in oVirt Node is 6 TB. For cluster levels 4.6 to 4.7, the maximum supported RAM per VM in oVirt Node is 16 TB.

However, the amount of RAM required varies depending on guest operating system requirements, guest application requirements, and guest memory activity and usage. KVM can also overcommit physical RAM for virtualized guests, allowing you to provision guests with RAM requirements greater than what is physically present, on the assumption that the guests are not all working concurrently at peak load. KVM does this by only allocating RAM for guests as required and shifting underutilized guests into swap.
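The arithmetic behind overcommitment can be sketched in a few lines. The following Python example uses hypothetical guest sizes and deliberately ignores memory used by the host itself (operating system, VDSM) and any reclaim mechanisms; it only shows how the provisioned total compares with physical RAM, and how much memory would have to come from elsewhere, such as swap, if every guest used its full allocation at once.

```python
def overcommit_summary(guest_ram_gb, host_ram_gb):
    """Summarize memory overcommitment for a set of guests on one host.

    Returns (provisioned_gb, overcommit_ratio, shortfall_gb), where shortfall_gb
    is the part of the provisioned guest RAM that physical RAM cannot back if
    every guest uses its full allocation at the same time.
    """
    provisioned = sum(guest_ram_gb)
    ratio = provisioned / host_ram_gb
    shortfall = max(0, provisioned - host_ram_gb)
    return provisioned, ratio, shortfall


# Hypothetical host with 64 GB of RAM running guests of 16, 16, 32, and 32 GB.
print(overcommit_summary([16, 16, 32, 32], 64))  # (96, 1.5, 32)
```

A ratio above 1 is only safe on the assumption stated above: the guests are not all working concurrently at peak load.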

2.2.3. Storage Requirements

Hosts require storage to store configuration, logs, and kernel dumps, and for use as swap space. Storage can be local or network-based. oVirt Node can boot with one, some, or all of its default allocations in network storage. Booting from network storage can result in a freeze if there is a network disconnect. Adding a drop-in multipath configuration file can help address losses in network connectivity. If oVirt Node boots from SAN storage and loses connectivity, the files become read-only until network connectivity is restored. Using network storage might result in performance degradation.

The minimum storage requirements of oVirt Node are documented in this section. The storage requirements for Enterprise Linux hosts vary based on the amount of disk space used by their existing configuration but are expected to be greater than those of oVirt Node.

The minimum storage requirements for host installation are listed below. However, use the default allocations, which use more storage space.

- / (root) - 6 GB

- /home - 1 GB

- /tmp - 1 GB

- /boot - 1 GB

- /var - 5 GB

- /var/crash - 10 GB

- /var/log - 8 GB

- /var/log/audit - 2 GB

- /var/tmp - 10 GB

- swap - 1 GB

- Anaconda reserves 20% of the thin pool size within the volume group for future metadata expansion. This is to prevent an out-of-the-box configuration from running out of space under normal usage conditions. Overprovisioning of thin pools during installation is also not supported.

- Minimum Total - 64 GiB
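When planning a custom partition layout, it can help to compare it against the per-mount minimums programmatically. The following Python sketch transcribes the list above; the dictionary and helper names are hypothetical. Note that the listed mounts add up to 45 GB, less than the documented 64 GiB total; the list does not itemize the difference, so treat the total as a separate requirement.

```python
# Minimum sizes in GB from the list above (oVirt Node host installation).
MIN_PARTITIONS_GB = {
    "/": 6,
    "/home": 1,
    "/tmp": 1,
    "/boot": 1,
    "/var": 5,
    "/var/crash": 10,
    "/var/log": 8,
    "/var/log/audit": 2,
    "/var/tmp": 10,
    "swap": 1,
}
MIN_TOTAL_GIB = 64  # documented minimum total


def undersized(planned_gb):
    """Return {mount: (planned, minimum)} for every mount below its minimum.

    Mounts missing from planned_gb are treated as 0 GB.
    """
    return {
        mount: (planned_gb.get(mount, 0), minimum)
        for mount, minimum in MIN_PARTITIONS_GB.items()
        if planned_gb.get(mount, 0) < minimum
    }


listed = sum(MIN_PARTITIONS_GB.values())
print(f"Listed mounts: {listed} GB; documented minimum total: {MIN_TOTAL_GIB} GiB")
```

For example, `undersized({**MIN_PARTITIONS_GB, "/var/tmp": 5})` reports `/var/tmp` as 5 GB against a 10 GB minimum.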

If you are also installing the Engine Appliance for self-hosted engine installation, /var/tmp must be at least 10 GB.

If you plan to use memory overcommitment, add enough swap space to provide virtual memory for all virtual machines. See Memory Optimization.

2.2.4. PCI Device Requirements

Hosts must have at least one network interface with a minimum bandwidth of 1 Gbps. Each host should have two network interfaces, with one dedicated to supporting network-intensive activities, such as virtual machine migration. The performance of such operations is limited by the bandwidth available.

For information about how to use PCI Express and conventional PCI devices with Intel Q35-based virtual machines, see Q35 and PCI vs PCI Express in Q35.

2.2.5. Device Assignment Requirements

If you plan to implement device assignment and PCI passthrough so that a virtual machine can use a specific PCIe device from a host, ensure the following requirements are met:

- CPU must support IOMMU (for example, VT-d or AMD-Vi). IBM POWER8 supports IOMMU by default.

- Firmware must support IOMMU.

- CPU root ports used must support ACS or ACS-equivalent capability.

- PCIe devices must support ACS or ACS-equivalent capability.

- All PCIe switches and bridges between the PCIe device and the root port should support ACS. For example, if a switch does not support ACS, all devices behind that switch share the same IOMMU group, and can only be assigned to the same virtual machine.

- For GPU support, Enterprise Linux 8 supports PCI device assignment of PCIe-based NVIDIA K-Series Quadro (model 2000 series or higher), GRID, and Tesla as non-VGA graphics devices. Currently up to two GPUs may be attached to a virtual machine in addition to one of the standard, emulated VGA interfaces. The emulated VGA is used for pre-boot and installation and the NVIDIA GPU takes over when the NVIDIA graphics drivers are loaded. Note that the NVIDIA Quadro 2000 is not supported, nor is the Quadro K420 card.

Check vendor specification and datasheets to confirm that your hardware meets these requirements. The lspci -v command can be used to print information for PCI devices already installed on a system.
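On a host where IOMMU is already enabled, the kernel exposes the resulting IOMMU groups in sysfs, one directory per group under /sys/kernel/iommu_groups/<group>/devices/. The following Python sketch (hypothetical helper names) lists those groups and picks out the ones containing more than one device; as described above, devices that share a group can only be assigned to the same virtual machine. If the directory is absent or empty, IOMMU is most likely not enabled.

```python
import os


def iommu_groups(root="/sys/kernel/iommu_groups"):
    """Map each IOMMU group number to the sorted PCI addresses of its devices."""
    groups = {}
    if not os.path.isdir(root):
        return groups  # no IOMMU groups exposed by the kernel
    for name in os.listdir(root):
        devices_dir = os.path.join(root, name, "devices")
        if name.isdigit() and os.path.isdir(devices_dir):
            groups[int(name)] = sorted(os.listdir(devices_dir))
    return groups


def shared_groups(groups):
    """Return only the groups that contain more than one device."""
    return {group: devices for group, devices in groups.items() if len(devices) > 1}


if __name__ == "__main__":
    for group, devices in sorted(iommu_groups().items()):
        print(f"IOMMU group {group}: {' '.join(devices)}")
```

Pair the PCI addresses printed here with the output of lspci -v to identify each device.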

2.2.6. vGPU Requirements

A host must meet the following requirements in order for virtual machines on that host to use a vGPU:

- vGPU-compatible GPU

- GPU-enabled host kernel

- Installed GPU with correct drivers

- Select a vGPU type and the number of instances that you would like to use with this virtual machine using the Manage vGPU dialog in the Administration Portal Host Devices tab of the virtual machine.

- vGPU-capable drivers installed on each host in the cluster

- vGPU-supported virtual machine operating system with vGPU drivers installed

2.3. Networking requirements

2.3.1. General requirements

oVirt requires IPv6 to remain enabled on the physical or virtual machine running the Engine. Do not disable IPv6 on the Engine machine, even if your systems do not use it.
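A quick way to confirm that IPv6 has not been disabled on the Engine machine is to read the kernel's disable_ipv6 sysctl. The following Python sketch is an assumption-based check, not part of oVirt: it inspects only the `all` setting under /proc/sys/net/ipv6/conf (individual interfaces can still be configured differently), and it treats a missing IPv6 sysctl tree, which is typical when the kernel boots with ipv6.disable=1, as disabled.

```python
import os


def ipv6_disabled(path="/proc/sys/net/ipv6/conf/all/disable_ipv6"):
    """Return True if IPv6 appears to be disabled on this machine."""
    if not os.path.exists(path):
        # No IPv6 sysctl at all, e.g. when booted with ipv6.disable=1.
        return True
    with open(path) as f:
        return f.read().strip() == "1"


if __name__ == "__main__" and os.path.isdir("/proc/sys"):
    print("IPv6 disabled:", ipv6_disabled())
```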

2.3.2. Firewall Requirements for DNS, NTP, and IPMI Fencing

The firewall requirements for all of the following topics are special cases that require individual consideration.

oVirt does not create a DNS or NTP server, so the firewall does not need to have open ports for incoming traffic.

By default, Enterprise Linux allows outbound traffic to DNS and NTP on any destination address. If you disable outgoing traffic, define exceptions for requests that are sent to DNS and NTP servers.


For IPMI (Intelligent Platform Management Interface) and other fencing mechanisms, the firewall does not need to have open ports for incoming traffic.

By default, Enterprise Linux allows outbound IPMI traffic to ports on any destination address. If you disable outgoing traffic, make exceptions for requests being sent to your IPMI or fencing servers.

Each oVirt Node and Enterprise Linux host in the cluster must be able to connect to the fencing devices of all other hosts in the cluster. If a cluster host experiences an error (for example, a network error or a storage error) and cannot function as a host, it must be able to connect to the other hosts in the data center.

The specific port number depends on the type of the fence agent you are using and how it is configured.

The firewall requirement tables in the following sections do not represent this option.

2.3.3. oVirt Engine Firewall Requirements

The oVirt Engine requires that a number of ports be opened to allow network traffic through the system’s firewall.

The engine-setup script can configure the firewall automatically.

The firewall configuration documented here assumes a default configuration.

| ID | Port(s) | Protocol | Source | Destination | Purpose | Encrypted by default |
|---|---|---|---|---|---|---|
| M1 | - | ICMP | oVirt Nodes, Enterprise Linux hosts | oVirt Engine | Optional. May help in diagnosis. | No |
| M2 | 22 | TCP | System(s) used for maintenance of the Engine including backend configuration, and software upgrades. | oVirt Engine | Secure Shell (SSH) access. Optional. | Yes |
| M3 | 2222 | TCP | Clients accessing virtual machine serial consoles. | oVirt Engine | Secure Shell (SSH) access to enable connection to virtual machine serial consoles. | Yes |
| M4 | 80, 443 | TCP | Administration Portal clients, VM Portal clients, oVirt Nodes, Enterprise Linux hosts, REST API clients | oVirt Engine | Provides HTTP (port 80, not encrypted) and HTTPS (port 443, encrypted) access to the Engine. HTTP redirects connections to HTTPS. | Yes |
| M5 | 6100 | TCP | Administration Portal clients, VM Portal clients | oVirt Engine | Provides websocket proxy access for a web-based console client, | No |
| M6 | 7410 | UDP | oVirt Nodes, Enterprise Linux hosts | oVirt Engine | If Kdump is enabled on the hosts, open this port for the fence_kdump listener on the Engine. See fence_kdump Advanced Configuration. | No |
| M7 | 54323 | TCP | Administration Portal clients | oVirt Engine ( | Required for communication with the | Yes |
| M8 | 6642 | TCP | oVirt Nodes, Enterprise Linux hosts | Open Virtual Network (OVN) southbound database | Connect to Open Virtual Network (OVN) database | Yes |
| M9 | 9696 | TCP | Clients of external network provider for OVN | External network provider for OVN | OpenStack Networking API | Yes, with configuration generated by engine-setup. |
| M10 | 35357 | TCP | Clients of external network provider for OVN | External network provider for OVN | OpenStack Identity API | Yes, with configuration generated by engine-setup. |
| M11 | 53 | TCP, UDP | oVirt Engine | DNS Server | DNS lookup requests from ports above 1023 to port 53, and responses. Open by default. | No |
| M12 | 123 | UDP | oVirt Engine | NTP Server | NTP requests from ports above 1023 to port 123, and responses. Open by default. | No |
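engine-setup can configure the firewall for you, but if you manage firewalld yourself it can help to derive the commands from the table. The following Python sketch only builds command strings; it does not run them. The rule list is a hand transcription of the inbound rows above (M1 is ICMP and M11/M12 are outbound, so they are left out), it assumes the default `public` zone, and the helper name is hypothetical. It also assumes the OVN components (M8 to M10) run on the Engine machine, which is only the case if you chose to install OVN.

```python
# Inbound rules for the Engine machine, transcribed from the table above as
# (ID, port, protocols). M1 (ICMP) and the outbound DNS/NTP rules M11 and M12
# are left out because they are not opened with --add-port.
ENGINE_INBOUND = [
    ("M2", "22", ("tcp",)),
    ("M3", "2222", ("tcp",)),
    ("M4", "80", ("tcp",)),
    ("M4", "443", ("tcp",)),
    ("M5", "6100", ("tcp",)),
    ("M6", "7410", ("udp",)),
    ("M7", "54323", ("tcp",)),
    ("M8", "6642", ("tcp",)),
    ("M9", "9696", ("tcp",)),
    ("M10", "35357", ("tcp",)),
]


def firewall_cmd_lines(rules, zone="public", skip=()):
    """Build firewall-cmd invocations (as strings) for every rule not in skip."""
    lines = [
        f"firewall-cmd --permanent --zone={zone} --add-port={port}/{proto}"
        for rule_id, port, protocols in rules
        if rule_id not in skip
        for proto in protocols
    ]
    lines.append("firewall-cmd --reload")  # apply the permanent configuration
    return lines


if __name__ == "__main__":
    # Example: skip the optional SSH rule and the OVN rules (M8-M10).
    for line in firewall_cmd_lines(ENGINE_INBOUND, skip={"M2", "M8", "M9", "M10"}):
        print(line)
```

Review the generated list against your own environment before applying it, since several rows are optional.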

2.3.4. Host Firewall Requirements

Enterprise Linux hosts and oVirt Nodes require a number of ports to be opened to allow network traffic through the system’s firewall. The firewall rules are automatically configured by default when adding a new host to the Engine, overwriting any pre-existing firewall configuration.

To disable automatic firewall configuration when adding a new host, clear the Automatically configure host firewall check box under Advanced Parameters.

| ID | Port(s) | Protocol | Source | Destination | Purpose | Encrypted by default |
|---|---|---|---|---|---|---|
| H1 | 22 | TCP | oVirt Engine | oVirt Nodes, Enterprise Linux hosts | Secure Shell (SSH) access. Optional. | Yes |
| H2 | 2223 | TCP | oVirt Engine | oVirt Nodes, Enterprise Linux hosts | Secure Shell (SSH) access to enable connection to virtual machine serial consoles. | Yes |
| H3 | 161 | UDP | oVirt Nodes, Enterprise Linux hosts | oVirt Engine | Simple network management protocol (SNMP). Only required if you want Simple Network Management Protocol traps sent from the host to one or more external SNMP managers. Optional. | No |
| H4 | 111 | TCP | NFS storage server | oVirt Nodes, Enterprise Linux hosts | NFS connections. Optional. | No |
| H5 | 5900 - 6923 | TCP | Administration Portal clients, VM Portal clients | oVirt Nodes, Enterprise Linux hosts | Remote guest console access via VNC and SPICE. These ports must be open to facilitate client access to virtual machines. | Yes (optional) |
| H6 | 5989 | TCP, UDP | Common Information Model Object Manager (CIMOM) | oVirt Nodes, Enterprise Linux hosts | Used by Common Information Model Object Managers (CIMOM) to monitor virtual machines running on the host. Only required if you want to use a CIMOM to monitor the virtual machines in your virtualization environment. Optional. | No |
| H7 | 9090 | TCP | oVirt Engine, Client machines | oVirt Nodes, Enterprise Linux hosts | Required to access the Cockpit web interface, if installed. | Yes |
| H8 | 16514 | TCP | oVirt Nodes, Enterprise Linux hosts | oVirt Nodes, Enterprise Linux hosts | Virtual machine migration using libvirt. | Yes |
| H9 | 49152 - 49215 | TCP | oVirt Nodes, Enterprise Linux hosts | oVirt Nodes, Enterprise Linux hosts | Virtual machine migration and fencing using VDSM. These ports must be open to facilitate both automated and manual migration of virtual machines. | Yes. Depending on agent for fencing, migration is done through libvirt. |
| H10 | 54321 | TCP | oVirt Engine, oVirt Nodes, Enterprise Linux hosts | oVirt Nodes, Enterprise Linux hosts | VDSM communications with the Engine and other virtualization hosts. | Yes |
| H11 | 54322 | TCP | oVirt Engine | oVirt Nodes, Enterprise Linux hosts | Required for communication with the | Yes |
| H12 | 6081 | UDP | oVirt Nodes, Enterprise Linux hosts | oVirt Nodes, Enterprise Linux hosts | Required, when Open Virtual Network (OVN) is used as a network provider, to allow OVN to create tunnels between hosts. | No |
| H13 | 53 | TCP, UDP | oVirt Nodes, Enterprise Linux hosts | DNS Server | DNS lookup requests from ports above 1023 to port 53, and responses. This port is required and open by default. | No |
| H14 | 123 | UDP | oVirt Nodes, Enterprise Linux hosts | NTP Server | NTP requests from ports above 1023 to port 123, and responses. This port is required and open by default. | |
| H15 | 4500 | TCP, UDP | oVirt Nodes | oVirt Nodes | Internet Protocol Security (IPsec) | Yes |
| H16 | 500 | UDP | oVirt Nodes | oVirt Nodes | Internet Protocol Security (IPsec) | Yes |
| H17 | - | AH, ESP | oVirt Nodes | oVirt Nodes | Internet Protocol Security (IPsec) | Yes |

By default, Enterprise Linux allows outbound traffic to DNS and NTP on any destination address. If you disable outgoing traffic, make exceptions for the oVirt Nodes and Enterprise Linux hosts to send requests to DNS and NTP servers. Other nodes may also require DNS and NTP. In that case, consult the requirements for those nodes and configure the firewall accordingly.
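When troubleshooting a blocked connection, it is often useful to ask which table row covers a given port. The following Python sketch (hypothetical names, hand-transcribed data) answers that for the port-based rows of the host table; H13 and H14 (outbound DNS and NTP) and H17 (AH/ESP, which has no port) are omitted. It returns every matching row, because ranges can overlap.

```python
# Port-based rules from the host table above as (ID, first port, last port, protocols).
# H13/H14 (outbound DNS/NTP) and H17 (AH/ESP, no port) are omitted.
HOST_RULES = [
    ("H1", 22, 22, {"tcp"}),
    ("H2", 2223, 2223, {"tcp"}),
    ("H3", 161, 161, {"udp"}),
    ("H4", 111, 111, {"tcp"}),
    ("H5", 5900, 6923, {"tcp"}),
    ("H6", 5989, 5989, {"tcp", "udp"}),
    ("H7", 9090, 9090, {"tcp"}),
    ("H8", 16514, 16514, {"tcp"}),
    ("H9", 49152, 49215, {"tcp"}),
    ("H10", 54321, 54321, {"tcp"}),
    ("H11", 54322, 54322, {"tcp"}),
    ("H12", 6081, 6081, {"udp"}),
    ("H15", 4500, 4500, {"tcp", "udp"}),
    ("H16", 500, 500, {"udp"}),
]


def rules_for(port, protocol):
    """Return the IDs of all host rules whose port range and protocol match."""
    protocol = protocol.lower()
    return [rule_id for rule_id, first, last, protocols in HOST_RULES
            if first <= port <= last and protocol in protocols]


print(rules_for(49160, "tcp"))  # ['H9']
print(rules_for(5989, "tcp"))   # ['H5', 'H6']
```

The second call shows one such overlap: TCP port 5989 (CIMOM, H6) falls inside the 5900 - 6923 console range (H5).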

2.3.5. Database Server Firewall Requirements

oVirt supports the use of a remote database server for the Engine database (engine) and the Data Warehouse database (ovirt_engine_history). If you plan to use a remote database server, it must allow connections from the Engine and the Data Warehouse service (which can be separate from the Engine).

Similarly, if you plan to access a local or remote Data Warehouse database from an external system, the database must allow connections from that system.

Accessing the Engine database from external systems is not supported.

| ID | Port(s) | Protocol | Source | Destination | Purpose | Encrypted by default |
|---|---|---|---|---|---|---|
| D1 | 5432 | TCP, UDP | oVirt Engine, Data Warehouse service | Engine ( Data Warehouse ( | Default port for PostgreSQL database connections. | |
| D2 | 5432 | TCP, UDP | External systems | Data Warehouse ( | Default port for PostgreSQL database connections. | Disabled by default. No, but can be enabled. |

2.3.6. Maximum Transmission Unit Requirements

The recommended Maximum Transmission Unit (MTU) setting for hosts during deployment is 1500. You can change the MTU after the environment is set up. Starting with oVirt 4.2, you can make this change from the Administration Portal; however, it requires a reboot of the hosted engine VM, and any other VMs using the management network must be powered down first.

- Shut down or unplug the vNICs of all VMs that use the management network, except for the Engine VM.

- Change the MTU in the Administration Portal: Network → Networks → select the management network → Edit → MTU.

- Enable global maintenance:

# hosted-engine --set-maintenance --mode=global

Then shut down the hosted engine VM:

# hosted-engine --vm-shutdown

Check the status to confirm it is down:

# hosted-engine --vm-status

Start the VM again:

# hosted-engine --vm-start

Check the status again to ensure it is back up, and try to migrate the hosted engine VM. The MTU value should persist through migrations.

- If everything looks OK, disable global maintenance:

# hosted-engine --set-maintenance --mode=none

Note: Only the Engine VM can be using the management network while making these changes (all other VMs using the management network should be down). Otherwise, the configuration does not take effect immediately, and the VM boots again with the wrong MTU even after the change.

3. Installing the oVirt Engine

3.1. Installing the oVirt Engine Machine and the Remote Server

- The oVirt Engine must run on Enterprise Linux 9 or later. For detailed installation instructions, see Performing a standard EL installation.

  This machine must meet the minimum Engine hardware requirements.

- Install a second Enterprise Linux machine to use for the databases. This machine will be referred to as the remote server.

3.2. Enabling the oVirt Engine Repositories

Ensure the correct repositories are enabled.

For oVirt 4.5: If you are installing on RHEL or a derivative, follow Installing on RHEL or derivatives first.

# dnf install -y centos-release-ovirt45

As discussed on the oVirt Users mailing list, we suggest that the user community use the oVirt master snapshot repositories to ensure that the latest fixes for platform regressions are available promptly.

For oVirt 4.4:

Common procedure valid for both 4.4 and 4.5 on Enterprise Linux 8 only:

You can check which repositories are currently enabled by running dnf repolist.

- Enable the javapackages-tools module.

# dnf module -y enable javapackages-tools

- Enable the pki-deps module.

# dnf module -y enable pki-deps

- Enable version 12 of the postgresql module.

# dnf module -y enable postgresql:12

- Enable version 2.3 of the mod_auth_openidc module.

# dnf module -y enable mod_auth_openidc:2.3

- Enable version 14 of the nodejs module:

# dnf module -y enable nodejs:14

- Synchronize installed packages to update them to the latest available versions.

# dnf distro-sync --nobest

For information on modules and module streams, see Installing, managing, and removing user-space components.

Before configuring the oVirt Engine, you must manually configure the Engine database on the remote server. You can also use this procedure to manually configure the Data Warehouse database if you do not want the Data Warehouse setup script to configure it automatically.

3.3. Preparing a Remote PostgreSQL Database

In a remote database environment, you must create the Engine database manually before running engine-setup.

|

The The locale settings in the |

The database name must contain only numbers, underscores, and lowercase letters.
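If database names are chosen by a provisioning script rather than typed by hand, the naming rule above can be enforced up front. A minimal Python sketch, with a hypothetical helper name, that accepts only digits, underscores, and lowercase ASCII letters:

```python
import re

# Rule from the note above: only numbers, underscores, and lowercase letters.
_VALID_DB_NAME = re.compile(r"[0-9a-z_]+")


def is_valid_db_name(name):
    """Return True if name uses only digits, underscores, and lowercase ASCII letters."""
    return _VALID_DB_NAME.fullmatch(name) is not None


for candidate in ("engine", "ovirt_engine_history", "Engine", "engine-db"):
    print(candidate, is_valid_db_name(candidate))
```

Uppercase letters and hyphens are rejected, so a name such as `engine-db` has to become `engine_db`.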

Enabling the oVirt Engine Repositories

Ensure the correct repositories are enabled.

For oVirt 4.5: If you are installing on RHEL or a derivative, follow Installing on RHEL or derivatives first.

# dnf install -y centos-release-ovirt45

As discussed on the oVirt Users mailing list, we suggest that the user community use the oVirt master snapshot repositories to ensure that the latest fixes for platform regressions are available promptly.

For oVirt 4.4:

Common procedure valid for both 4.4 and 4.5 on Enterprise Linux 8 only:

You can check which repositories are currently enabled by running dnf repolist.

- Enable the javapackages-tools module.

# dnf module -y enable javapackages-tools

- Enable version 12 of the postgresql module.

# dnf module -y enable postgresql:12

- Enable version 2.3 of the mod_auth_openidc module.

# dnf module -y enable mod_auth_openidc:2.3

- Enable version 14 of the nodejs module:

# dnf module -y enable nodejs:14

- Synchronize installed packages to update them to the latest available versions.

# dnf distro-sync --nobest

For information on modules and module streams, see Installing, managing, and removing user-space components.

Initializing the PostgreSQL Database

- Install the PostgreSQL server package:

# dnf install postgresql-server postgresql-contrib

- Initialize the PostgreSQL database instance:

# postgresql-setup --initdb

- Enable the postgresql service and configure it to start when the machine boots:

# systemctl enable postgresql
# systemctl start postgresql

- Connect to the psql command line interface as the postgres user:

# su - postgres -c psql

- Create a default user. The Engine’s default user is engine:

postgres=# create role user_name with login encrypted password 'password';

- Create a database. The Engine’s default database name is engine:

postgres=# create database database_name owner user_name template template0 encoding 'UTF8' lc_collate 'en_US.UTF-8' lc_ctype 'en_US.UTF-8';

- Connect to the new database:

postgres=# \c database_name

- Add the uuid-ossp extension:

database_name=# CREATE EXTENSION "uuid-ossp";

- Add the plpgsql language if it does not exist:

database_name=# CREATE LANGUAGE plpgsql;

- Quit the psql interface:

database_name=# \q

- Edit the /var/lib/pgsql/data/pg_hba.conf file to enable md5 client authentication, so that the Engine can access the database remotely. Add the following lines immediately below the line that starts with local at the bottom of the file. Replace X.X.X.X with the IP address of the Engine or Data Warehouse machine, and replace 0-32 or 0-128 with the CIDR mask length:

host    database_name    user_name    X.X.X.X/0-32    md5
host    database_name    user_name    X.X.X.X::/0-128   md5

For example:

# IPv4, 32-bit address:
host    engine    engine    192.168.12.10/32    md5
# IPv6, 128-bit address:
host    engine    engine    fe80::7a31:c1ff:0000:0000/96   md5

- Allow TCP/IP connections to the database. Edit the /var/lib/pgsql/data/postgresql.conf file and add the following line:

listen_addresses='*'

This example configures the postgresql service to listen for connections on all interfaces. You can specify an interface by giving its IP address.

- Update the PostgreSQL server’s configuration. In the /var/lib/pgsql/data/postgresql.conf file, add the following lines to the bottom of the file:

autovacuum_vacuum_scale_factor=0.01
autovacuum_analyze_scale_factor=0.075
autovacuum_max_workers=6
maintenance_work_mem=65536
max_connections=150
work_mem=8192

- Open the default port used for PostgreSQL database connections, and save the updated firewall rules:

# firewall-cmd --zone=public --add-service=postgresql
# firewall-cmd --permanent --zone=public --add-service=postgresql

- Restart the postgresql service:

# systemctl restart postgresql

Optionally, set up SSL to secure database connections.
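Two parts of this procedure are easy to get wrong by hand: the CIDR notation in pg_hba.conf, and making sure the postgresql.conf values you appended are the ones that actually take effect. The following Python sketch helps with both. The helper names are hypothetical; `pg_hba_line` uses the standard `ipaddress` module and zeroes any host bits outside the prefix, and `settings_to_fix` is a simplified parser that only understands `name = value` lines, strips `#` comments and single quotes, and lets later lines override earlier ones.

```python
import ipaddress

# Values the procedure above adds to postgresql.conf.
EXPECTED_SETTINGS = {
    "listen_addresses": "*",
    "autovacuum_vacuum_scale_factor": "0.01",
    "autovacuum_analyze_scale_factor": "0.075",
    "autovacuum_max_workers": "6",
    "maintenance_work_mem": "65536",
    "max_connections": "150",
    "work_mem": "8192",
}


def pg_hba_line(database, user, client_address, prefix=None):
    """Build a pg_hba.conf 'host' entry allowing md5 logins from client_address.

    prefix defaults to a single-host mask: /32 for IPv4, /128 for IPv6.
    Host bits outside the prefix are zeroed (strict=False).
    """
    addr = ipaddress.ip_address(client_address)
    if prefix is None:
        prefix = addr.max_prefixlen
    network = ipaddress.ip_network(f"{addr}/{prefix}", strict=False)
    return f"host    {database}    {user}    {network}    md5"


def settings_to_fix(conf_text, expected=EXPECTED_SETTINGS):
    """Return {name: current value or None} for expected settings that differ."""
    current = {}
    for raw in conf_text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments
        if "=" in line:
            name, value = line.split("=", 1)
            current[name.strip()] = value.strip().strip("'")  # later lines win
    return {name: current.get(name) for name, value in expected.items()
            if current.get(name) != value}


print(pg_hba_line("engine", "engine", "192.168.12.10"))
```

For the IPv6 example above, `pg_hba_line("engine", "engine", "fe80::7a31:c1ff:0000:0000", 96)` produces the equivalent compressed form `fe80::7a31:c1ff:0:0/96`.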

3.4. Installing and Configuring the oVirt Engine

Install the package and dependencies for the oVirt Engine, and configure it using the engine-setup command. The script asks you a series of questions and, after you provide the required values for all questions, applies that configuration and starts the ovirt-engine service.

|

The You can run |

-

Ensure all packages are up to date:

# dnf upgrade --nobestReboot the machine if any kernel-related packages were updated.

-

Install the

ovirt-enginepackage and dependencies.# dnf install ovirt-engine -

Run the engine-setup command to begin configuring the oVirt Engine:

# engine-setup

-

Optional: Type Yes and press Enter to set up Cinderlib integration on this machine:

Set up Cinderlib integration (Currently in tech preview) (Yes, No) [No]:

Cinderlib is a Technology Preview feature only. Technology Preview features are not supported with Red Hat production service level agreements (SLAs), might not be functionally complete, and Red Hat does not recommend using them for production. These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process. For more information on Red Hat Technology Preview features support scope, see Red Hat Technology Preview Features Support Scope.

-

Press Enter to configure the Engine on this machine:

Configure Engine on this host (Yes, No) [Yes]:

-

Optional: Install Open Virtual Network (OVN). Selecting Yes installs an OVN server on the Engine machine and adds it to oVirt as an external network provider. This action also configures the Default cluster to use OVN as its default network provider.

Also see the "Next steps" in Adding Open Virtual Network (OVN) as an External Network Provider in the Administration Guide.

Configuring ovirt-provider-ovn also sets the Default cluster’s default network provider to ovirt-provider-ovn.
Non-Default clusters may be configured with an OVN after installation.
Configure ovirt-provider-ovn (Yes, No) [Yes]:

For more information on using OVN networks in oVirt, see Adding Open Virtual Network (OVN) as an External Network Provider in the Administration Guide.

-

Optional: Allow engine-setup to configure a WebSocket Proxy server so that users can connect to virtual machines through the noVNC console:

Configure WebSocket Proxy on this machine? (Yes, No) [Yes]:

-

To configure Data Warehouse on a remote server, answer No. After completing the Engine configuration, see Installing and Configuring Data Warehouse on a Separate Machine.

Please note: Data Warehouse is required for the engine. If you choose to not configure it on this host, you have to configure it on a remote host, and then configure the engine on this host so that it can access the database of the remote Data Warehouse host.
Configure Data Warehouse on this host (Yes, No) [Yes]:

oVirt only supports installing the Data Warehouse database, the Data Warehouse service, and Grafana all on the same machine as each other.

-

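Grafana must run on the same machine as the Data Warehouse service. Because you are installing Data Warehouse on a separate machine, enter No so that Grafana is not configured on the Engine machine:

Configure Grafana on this host (Yes, No) [Yes]:

-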

Optional: Allow access to a virtual machine’s serial console from the command line.

Configure VM Console Proxy on this host (Yes, No) [Yes]:

Additional configuration is required on the client machine to use this feature. See Opening a Serial Console to a Virtual Machine in the Virtual Machine Management Guide.

-

Press Enter to accept the automatically detected host name, or enter an alternative host name and press Enter. Note that the automatically detected host name may be incorrect if you are using virtual hosts.

Host fully qualified DNS name of this server [autodetected host name]:

-

The engine-setup command checks your firewall configuration and offers to open the ports used by the Engine for external communication, such as ports 80 and 443. If you do not allow engine-setup to modify your firewall configuration, you must manually open the ports used by the Engine. firewalld is configured as the firewall manager.

Setup can automatically configure the firewall on this system.
Note: automatic configuration of the firewall may overwrite current settings.
Do you want Setup to configure the firewall? (Yes, No) [Yes]:

If you choose to automatically configure the firewall, and no firewall managers are active, you are prompted to select your chosen firewall manager from a list of supported options. Type the name of the firewall manager and press Enter. This applies even in cases where only one option is listed.

-

Specify whether to configure the Engine database on this machine, or on another machine:

Where is the Engine database located? (Local, Remote) [Local]:

Deployment with a remote engine database is now deprecated. This functionality will be removed in a future release.

If you select Remote, input the following values for the preconfigured remote database server. Replace localhost with the IP address or FQDN of the remote database server:

Engine database host [localhost]:
Engine database port [5432]:
Engine database secured connection (Yes, No) [No]:
Engine database name [engine]:
Engine database user [engine]:
Engine database password:

-

Set a password for the automatically created administrative user of the oVirt Engine:

Engine admin password:
Confirm engine admin password:

-

Select Gluster, Virt, or Both:

Application mode (Both, Virt, Gluster) [Both]:

-

Both - offers the greatest flexibility. In most cases, select Both.

-

Virt - allows you to run virtual machines in the environment.

-

Gluster - only allows you to manage GlusterFS from the Administration Portal.

-

-

If you installed the OVN provider, you can choose to use the default credentials, or specify an alternative.

Use default credentials (admin@internal) for ovirt-provider-ovn (Yes, No) [Yes]:
oVirt OVN provider user[admin@internal]:
oVirt OVN provider password:

-

Set the default value for the wipe_after_delete flag, which wipes the blocks of a virtual disk when the disk is deleted.

Default SAN wipe after delete (Yes, No) [No]:

-

The Engine uses certificates to communicate securely with its hosts. This certificate can also optionally be used to secure HTTPS communications with the Engine. Provide the organization name for the certificate:

Organization name for certificate [autodetected domain-based name]: -

Optionally, allow engine-setup to make the landing page of the Engine the default page presented by the Apache web server:

Setup can configure the default page of the web server to present the application home page. This may conflict with existing applications.
Do you wish to set the application as the default web page of the server? (Yes, No) [Yes]:

-

By default, external SSL (HTTPS) communication with the Engine is secured with the self-signed certificate created earlier in the configuration to securely communicate with hosts. Alternatively, choose another certificate for external HTTPS connections; this does not affect how the Engine communicates with hosts:

Setup can configure apache to use SSL using a certificate issued from the internal CA. Do you wish Setup to configure that, or prefer to perform that manually? (Automatic, Manual) [Automatic]: -

You can specify a unique password for the Grafana admin user, or use the same one as the Engine admin password:

Use Engine admin password as initial Grafana admin password (Yes, No) [Yes]: -

Review the installation settings, and press Enter to accept the values and proceed with the installation:

Please confirm installation settings (OK, Cancel) [OK]:

When your environment has been configured, engine-setup displays details about how to access your environment.

If you chose to manually configure the firewall, engine-setup provides a custom list of ports that need to be opened, based on the options selected during setup. engine-setup also saves your answers to a file that can be used to reconfigure the Engine using the same values, and outputs the location of the log file for the oVirt Engine configuration process.
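If you answered Remote for the Engine database, you may want to confirm that the Engine machine can reach the database host on the port you entered (5432 by default). The bash sketch below shows one way to check this. db_port_reachable is a hypothetical helper name. The check assumes that bash was built with /dev/tcp redirection support and that the coreutils timeout command is available.

```shell
#!/usr/bin/env bash
# Hypothetical helper: check that a remote database port accepts TCP connections.
# Returns 0 if a connection succeeds, 2 on bad arguments, and another non-zero
# status if the connection is refused or times out.
# Usage: db_port_reachable HOST [PORT] [TIMEOUT_SECONDS]
db_port_reachable() {
    local host=$1 port=${2:-5432} secs=${3:-5}
    if [[ -z $host ]] || ! [[ $port =~ ^[0-9]+$ ]]; then
        echo "usage: db_port_reachable HOST [PORT] [TIMEOUT_SECONDS]" >&2
        return 2
    fi
    # The child bash opens a TCP socket on fd 3; it is closed when the child exits.
    timeout "$secs" bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$host" "$port" 2>/dev/null
}
```

For example, `db_port_reachable dbserver.example.com 5432 && echo reachable` (the host name is a placeholder). A successful TCP connection shows only that the port is open. The PostgreSQL client tool `pg_isready -h dbserver.example.com -p 5432` additionally reports whether the server is accepting connections.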

-

If you intend to link your oVirt environment with a directory server, configure the date and time to synchronize with the system clock used by the directory server to avoid unexpected account expiry issues. See Synchronizing the System Clock with a Remote Server in the Enterprise Linux System Administrator’s Guide for more information.

-

Install the certificate authority according to the instructions provided by your browser. You can get the certificate authority’s certificate by navigating to http://<manager-fqdn>/ovirt-engine/services/pki-resource?resource=ca-certificate&format=X509-PEM-CA, replacing <manager-fqdn> with the FQDN that you provided during the installation.
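If you need the CA certificate as a file, for example to import it on several client machines, you can build the URL above from the Engine FQDN and download it. This bash sketch uses hypothetical helper names (engine_ca_url, fetch_engine_ca) and assumes curl is installed.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for fetching the Engine CA certificate.

# Print the CA certificate URL for an Engine FQDN.
# Usage: engine_ca_url FQDN
engine_ca_url() {
    printf 'http://%s/ovirt-engine/services/pki-resource?resource=ca-certificate&format=X509-PEM-CA\n' "$1"
}

# Download the CA certificate to OUTPUT; curl --fail makes HTTP errors fatal.
# Usage: fetch_engine_ca FQDN OUTPUT
fetch_engine_ca() {
    local fqdn=$1 out=$2
    [[ -n $fqdn && -n $out ]] || { echo "usage: fetch_engine_ca FQDN OUTPUT" >&2; return 2; }
    curl --fail --silent --show-error --output "$out" "$(engine_ca_url "$fqdn")"
}
```

For example, run `fetch_engine_ca manager.example.com ovirt-ca.pem`, then `openssl x509 -in ovirt-ca.pem -noout -subject -enddate` to inspect the certificate before you import it. The URL uses plain HTTP. If tampering is a concern, compare the output of `openssl x509 -in ovirt-ca.pem -noout -fingerprint -sha256` with the fingerprint of the CA certificate on the Engine machine.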

Install the Data Warehouse service and database on the remote server:

3.5. Installing and Configuring Data Warehouse on a Separate Machine

This section describes installing and configuring the Data Warehouse service on a separate machine from the oVirt Engine. Installing Data Warehouse on a separate machine helps to reduce the load on the Engine machine.

oVirt only supports installing the Data Warehouse database, the Data Warehouse service and Grafana all on the same machine as each other, even though you can install each of these components on separate machines from each other.

-

The oVirt Engine is installed on a separate machine.

-

A physical server or virtual machine running Enterprise Linux 8.

-

The Engine database password.

Enabling the oVirt Engine Repositories

Ensure the correct repositories are enabled.

For oVirt 4.5: If you are installing on RHEL or a derivative, first follow Installing on RHEL or derivatives.

# dnf install -y centos-release-ovirt45

As discussed in the oVirt Users mailing list, we suggest that the user community use the oVirt master snapshot repositories to ensure that the latest fixes for platform regressions are promptly available.

For oVirt 4.4:

Common procedure valid for both 4.4 and 4.5 on Enterprise Linux 8 only:

You can check which repositories are currently enabled by running dnf repolist.

-

Enable the javapackages-tools module:

# dnf module -y enable javapackages-tools

-

Enable the pki-deps module:

# dnf module -y enable pki-deps

-

Enable version 12 of the postgresql module:

# dnf module -y enable postgresql:12

-

Enable version 2.3 of the mod_auth_openidc module:

# dnf module -y enable mod_auth_openidc:2.3

-

Enable version 14 of the nodejs module:

# dnf module -y enable nodejs:14

-

Synchronize installed packages to update them to the latest available versions.

# dnf distro-sync --nobest

For information on modules and module streams, see the following sections in Installing, managing, and removing user-space components
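The module steps above always run in the same order, so you can wrap them in a single function. In this bash sketch, OVIRT_MODULE_STREAMS, ovirt_run, and enable_ovirt_modules are hypothetical names. When DRY_RUN=1 is set, the function prints the commands instead of running them, so you can review the sequence before applying it as root.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper for the Enterprise Linux 8 module steps in this section.

# Module streams, in the order given above.
OVIRT_MODULE_STREAMS=(javapackages-tools pki-deps postgresql:12 mod_auth_openidc:2.3 nodejs:14)

# Run a command, or only print it when DRY_RUN=1.
ovirt_run() {
    if [[ ${DRY_RUN:-0} == 1 ]]; then
        echo "$*"
    else
        "$@"
    fi
}

# Enable each module stream, stopping at the first failure, then
# synchronize installed packages with the enabled streams.
enable_ovirt_modules() {
    local stream
    for stream in "${OVIRT_MODULE_STREAMS[@]}"; do
        ovirt_run dnf module -y enable "$stream" || return 1
    done
    ovirt_run dnf distro-sync --nobest
}
```

Run `DRY_RUN=1 enable_ovirt_modules` to review the six commands, then run `enable_ovirt_modules` to apply them.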

Installing Data Warehouse on a Separate Machine

-

Log in to the machine where you want to install the database.

-

Ensure that all packages are up to date:

# dnf upgrade --nobest -

Install the ovirt-engine-dwh-setup package:

# dnf install ovirt-engine-dwh-setup

-

Run the engine-setup command to begin the installation:

# engine-setup

-

Answer Yes to install Data Warehouse on this machine:

Configure Data Warehouse on this host (Yes, No) [Yes]:

-

Answer Yes to install Grafana on this machine:

Configure Grafana on this host (Yes, No) [Yes]:

-

Press Enter to accept the automatically detected host name, or enter an alternative host name and press Enter:

Host fully qualified DNS name of this server [autodetected hostname]:

-

Press Enter to automatically configure the firewall, or type No and press Enter to maintain existing settings:

Setup can automatically configure the firewall on this system.
Note: automatic configuration of the firewall may overwrite current settings.
Do you want Setup to configure the firewall? (Yes, No) [Yes]:

If you choose to automatically configure the firewall, and no firewall managers are active, you are prompted to select your chosen firewall manager from a list of supported options. Type the name of the firewall manager and press Enter. This applies even in cases where only one option is listed.

-

Enter the fully qualified domain name of the Engine machine, and then press Enter:

Host fully qualified DNS name of the engine server []:

-

Press Enter to allow setup to sign the certificate on the Engine via SSH:

Setup will need to do some actions on the remote engine server. Either automatically, using ssh as root to access it, or you will be prompted to manually perform each such action.
Please choose one of the following:
1 - Access remote engine server using ssh as root
2 - Perform each action manually, use files to copy content around
(1, 2) [1]:

-

Press Enter to accept the default SSH port, or enter an alternative port number and then press Enter:

ssh port on remote engine server [22]:

-

Enter the root password for the Engine machine:

root password on remote engine server manager.example.com: -

Specify whether to host the Data Warehouse database on this machine (Local) or on another machine (Remote):

oVirt only supports installing the Data Warehouse database, the Data Warehouse service and Grafana all on the same machine as each other, even though you can install each of these components on separate machines from each other.

Where is the DWH database located? (Local, Remote) [Local]:

-

If you select Local, the engine-setup script can configure your database automatically (including adding a user and a database), or it can connect to a preconfigured local database:

Setup can configure the local postgresql server automatically for the DWH to run. This may conflict with existing applications.
Would you like Setup to automatically configure postgresql and create DWH database, or prefer to perform that manually? (Automatic, Manual) [Automatic]:

-

If you select Automatic by pressing Enter, no further action is required here.

-

If you select Manual, input the following values for the manually configured local database:

DWH database secured connection (Yes, No) [No]:
DWH database name [ovirt_engine_history]:
DWH database user [ovirt_engine_history]:
DWH database password:

-

-

If you select Remote, you are prompted to provide details about the remote database host. Input the following values for the preconfigured remote database host:

DWH database host []: dwh-db-fqdn
DWH database port [5432]:
DWH database secured connection (Yes, No) [No]:
DWH database name [ovirt_engine_history]:
DWH database user [ovirt_engine_history]:
DWH database password: password

-

If you select Remote, you are prompted to enter the user name and password for the Grafana database user:

Grafana database user [ovirt_engine_history_grafana]:
Grafana database password:

-

-

Enter the fully qualified domain name and password for the Engine database machine. If you are installing the Data Warehouse database on the same machine where the Engine database is installed, use the same FQDN. Press Enter to accept the default values in each other field:

Engine database host []: engine-db-fqdn
Engine database port [5432]:
Engine database secured connection (Yes, No) [No]:
Engine database name [engine]:
Engine database user [engine]:
Engine database password: password

-

Choose how long Data Warehouse will retain collected data:

Please choose Data Warehouse sampling scale:
(1) Basic
(2) Full
(1, 2)[1]:

Full uses the default values for the data storage settings listed in Application Settings for the Data Warehouse service in ovirt-engine-dwhd.conf. It is recommended when Data Warehouse is installed on a remote host.

Basic reduces the value of DWH_TABLES_KEEP_HOURLY to 720 and DWH_TABLES_KEEP_DAILY to 0, easing the load on the Engine machine. Use Basic when the Engine and Data Warehouse are installed on the same machine.

-

Confirm your installation settings:

Please confirm installation settings (OK, Cancel) [OK]: -

After the Data Warehouse configuration is complete, restart the ovirt-engine service on the oVirt Engine:

# systemctl restart ovirt-engine

-

Optionally, set up SSL to secure database connections.
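To check which retention values are in effect after you choose a sampling scale, you can read them back from the Data Warehouse configuration. This bash sketch makes two assumptions: the settings are stored as KEY=VALUE lines in *.conf files inside a drop-in directory, and later files in lexical order override earlier ones. The directory you pass (for example, /etc/ovirt-engine-dwh/ovirt-engine-dwhd.conf.d) is also an assumption, and the function names are hypothetical. The sketch converts DWH_TABLES_KEEP_HOURLY from hours to whole days. For example, the Basic value of 720 hours is 30 days.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for reading Data Warehouse retention settings.

# Print the last value assigned to KEY in the *.conf files of DIR. Files are
# read in glob (lexical) order, so later files override earlier ones.
# Usage: dwh_conf_get DIR KEY
dwh_conf_get() {
    local dir=$1 key=$2 f line value=
    # Matches KEY=VALUE or KEY="VALUE"; the value is captured without quotes.
    local re="^[[:space:]]*${key}=\"?([^\"]*)\"?[[:space:]]*$"
    for f in "$dir"/*.conf; do
        [[ -f $f ]] || continue              # the glob matched nothing
        while IFS= read -r line || [[ -n $line ]]; do
            [[ $line =~ $re ]] && value=${BASH_REMATCH[1]}
        done < "$f"
    done
    printf '%s\n' "$value"
}

# Summarize hourly and daily retention from DIR, converting hours to whole days.
# Usage: dwh_retention_summary DIR
dwh_retention_summary() {
    local dir=$1 hourly daily
    hourly=$(dwh_conf_get "$dir" DWH_TABLES_KEEP_HOURLY)
    daily=$(dwh_conf_get "$dir" DWH_TABLES_KEEP_DAILY)
    if [[ $hourly =~ ^[0-9]+$ ]]; then
        printf 'DWH_TABLES_KEEP_HOURLY=%s (%s days)\n' "$hourly" "$(( 10#$hourly / 24 ))"
    else
        printf 'DWH_TABLES_KEEP_HOURLY is not set in %s\n' "$dir"
    fi
    printf 'DWH_TABLES_KEEP_DAILY=%s\n' "${daily:-unset}"
}
```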

Log in to the Administration Portal, where you can add hosts and storage to the environment:

3.6. Connecting to the Administration Portal

Access the Administration Portal using a web browser.

-

In a web browser, navigate to https://manager-fqdn/ovirt-engine, replacing manager-fqdn with the FQDN that you provided during installation.

You can access the Administration Portal using alternate host names or IP addresses. To do so, add a configuration file under /etc/ovirt-engine/engine.conf.d/. For example:

# vi /etc/ovirt-engine/engine.conf.d/99-custom-sso-setup.conf
SSO_ALTERNATE_ENGINE_FQDNS="alias1.example.com alias2.example.com"

Separate the alternate host names in the list with spaces. You can also add the IP address of the Engine to the list, but using IP addresses instead of DNS-resolvable host names is not recommended.

-

Click Administration Portal. An SSO login page displays. SSO login enables you to log in to the Administration Portal and the VM Portal at the same time.

-

Enter your User Name and Password. If you are logging in for the first time, use the user name admin along with the password that you specified during installation.

-

Select the Domain to authenticate against. If you are logging in using the internal admin user name, select the internal domain.

-

Click Log In.

-

You can view the Administration Portal in multiple languages. The default selection is chosen based on the locale settings of your web browser. If you want to view the Administration Portal in a language other than the default, select your preferred language from the drop-down list on the welcome page.

To log out of the oVirt Administration Portal, click your user name in the header bar and click Sign Out. You are logged out of all portals and the Engine welcome screen displays.
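You can generate the alternate host name file described in the first step instead of editing it by hand. In this bash sketch, write_sso_alternate_fqdns is a hypothetical helper name. The target directory is a parameter, so you can review the output before you place it in /etc/ovirt-engine/engine.conf.d/.

```shell
#!/usr/bin/env bash
# Hypothetical helper that writes the SSO alternate-FQDN drop-in file.
# Usage: write_sso_alternate_fqdns DIR NAME [NAME...]
write_sso_alternate_fqdns() {
    local dir=$1
    shift
    if (( $# == 0 )); then
        echo "at least one alternate host name is required" >&2
        return 1
    fi
    # With IFS set to a single space, "$*" joins the names with single spaces,
    # the separator this setting expects.
    local IFS=' '
    printf 'SSO_ALTERNATE_ENGINE_FQDNS="%s"\n' "$*" > "$dir/99-custom-sso-setup.conf"
}
```

For example, `write_sso_alternate_fqdns /etc/ovirt-engine/engine.conf.d alias1.example.com alias2.example.com` writes the same line as the example above. Settings under engine.conf.d are presumably read when the service starts, so restart the ovirt-engine service (`systemctl restart ovirt-engine`) for the change to take effect.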

4. Installing Hosts for oVirt

oVirt supports two types of hosts: oVirt Nodes (oVirt Node) and Enterprise Linux hosts. Depending on your environment, you may want to use one type only, or both. At least two hosts are required for features such as migration and high availability.

See Recommended practices for configuring host networks for networking information.

|

SELinux is in enforcing mode upon installation. To verify, run |

| Host Type | Other Names | Description |
|---|---|---|
| oVirt Node | oVirt Node, thin host | This is a minimal operating system based on Enterprise Linux. It is distributed as an ISO file from the Customer Portal and contains only the packages required for the machine to act as a host. |
| Enterprise Linux host | Enterprise Linux host, thick host | Enterprise Linux systems with the appropriate repositories enabled can be used as hosts. |

When you create a new data center, you can set the compatibility version. Select the compatibility version that suits all the hosts in the data center. Once set, version regression is not allowed. For a fresh oVirt installation, the latest compatibility version is set in the default data center and default cluster; to use an earlier compatibility version, you must create additional data centers and clusters.

4.1. oVirt Nodes

4.1.1. Installing oVirt Nodes

oVirt Node is a minimal operating system based on Enterprise Linux that is designed to provide a simple method for setting up a physical machine to act as a hypervisor in an oVirt environment. The minimal operating system contains only the packages required for the machine to act as a hypervisor, and features a Cockpit web interface for monitoring the host and performing administrative tasks. See Running Cockpit for the minimum browser requirements.

oVirt Node supports NIST 800-53 partitioning requirements to improve security. oVirt Node uses a NIST 800-53 partition layout by default.

The host must meet the minimum host requirements.

When installing or reinstalling the host’s operating system, oVirt strongly recommends that you first detach any existing non-OS storage that is attached to the host to avoid accidental initialization of these disks, and with that, potential data loss.

-

Visit the oVirt Node Download page.

-

Choose the version of oVirt Node to download and click its Installation ISO link.

-

Write the oVirt Node Installation ISO disk image to a USB, CD, or DVD.

-

Start the machine on which you are installing oVirt Node, booting from the prepared installation media.

-

From the boot menu, select Install oVirt Node 4.5 and press Enter.

You can also press the Tab key to edit the kernel parameters. Kernel parameters must be separated by a space, and you can boot the system using the specified kernel parameters by pressing the Enter key. Press the Esc key to clear any changes to the kernel parameters and return to the boot menu.

-

Select a language, and click Continue.

-

Select a keyboard layout from the Keyboard Layout screen and click Done.

-

Select the device on which to install oVirt Node from the Installation Destination screen. Optionally, enable encryption. Click Done.

Use the Automatically configure partitioning option.

-

Select a time zone from the Time & Date screen and click Done.

-

Select a network from the Network & Host Name screen and click Configure… to configure the connection details.

To use the connection every time the system boots, select the Connect automatically with priority check box. For more information, see Configuring network and host name options in the Enterprise Linux 8 Installation Guide.

Enter a host name in the Host Name field, and click Done.

-

Optional: Configure Security Policy and Kdump. See Customizing your RHEL installation using the GUI in Performing a standard RHEL installation for Enterprise Linux 8 for more information on each of the sections in the Installation Summary screen.

-

Click Begin Installation.

-

Set a root password and, optionally, create an additional user while oVirt Node installs.

Do not create untrusted users on oVirt Node, as this can lead to exploitation of local security vulnerabilities.

-

Click Reboot to complete the installation.

When oVirt Node restarts, nodectl check performs a health check on the host and displays the result when you log in on the command line. The message node status: OK or node status: DEGRADED indicates the health status. Run nodectl check to get more information.

If necessary, you can prevent kernel modules from loading automatically.

4.1.2. Installing a third-party package on oVirt-node

If you need a package that is not included in the oVirt-provided repositories, you must add a repository that provides it before you can install the package.

-

The path to the repository that includes the package you want to install.

-

You are logged in to the host with root permissions.

-

Open an existing .repo file or create a new one in /etc/yum.repos.d/.

-

Add an entry to the .repo file. For example, to install sssd-ldap, add the following entry to a new .repo file named third-party.repo:

# imgbased: set-enabled
[custom-sssd-ldap]
name = Provides sssd-ldap
mirrorlist=http://mirrorlist.centos.org/?release=$stream&arch=$basearch&repo=BaseOS&infra=$infra
#baseurl=http://mirror.centos.org/$contentdir/$stream/BaseOS/$basearch/os/
gpgcheck=1
enabled=1
gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-centosofficial
includepkgs = sssd-ldap

-

Install sssd-ldap:

# dnf install sssd-ldap
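If you add several single-package repositories, you can generate entries in the same shape as the third-party.repo example. In this bash sketch, write_include_repo is a hypothetical helper name. It reproduces the fields of the example above, including the "# imgbased: set-enabled" header line, and lists only the named packages in includepkgs.

```shell
#!/usr/bin/env bash
# Hypothetical helper that writes a .repo file restricted to specific packages,
# following the third-party.repo example above.
# Usage: write_include_repo FILE REPO_ID MIRRORLIST_URL GPGKEY_URL PACKAGE [PACKAGE...]
write_include_repo() {
    if (( $# < 5 )); then
        echo "usage: write_include_repo FILE REPO_ID MIRRORLIST_URL GPGKEY_URL PACKAGE..." >&2
        return 1
    fi
    local file=$1 id=$2 mirrorlist=$3 gpgkey=$4
    shift 4
    local IFS=' '        # "$*" below joins package names with single spaces
    {
        echo "# imgbased: set-enabled"
        echo "[$id]"
        echo "name = Provides $*"
        echo "mirrorlist=$mirrorlist"
        echo "gpgcheck=1"
        echo "enabled=1"
        echo "gpgkey=$gpgkey"
        echo "includepkgs = $*"   # only these packages are visible from this repository
    } > "$file"
}
```

When you call the function, single-quote the mirrorlist URL so that $stream, $basearch, and $infra are written to the file unexpanded for dnf to substitute.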

4.1.3. Advanced Installation

Custom Partitioning

Custom partitioning on oVirt Node (oVirt Node) is not recommended. Use the Automatically configure partitioning option in the Installation Destination window.

If your installation requires custom partitioning, select the I will configure partitioning option during the installation, and note that the following restrictions apply:

-

Ensure the default LVM Thin Provisioning option is selected in the Manual Partitioning window.

-

The following directories are required and must be on thin provisioned logical volumes:

-

root (/)

-

/home

-

/tmp

-

/var

-

/var/crash

-

/var/log

-

/var/log/audit

Do not create a separate partition for /usr. Doing so will cause the installation to fail. /usr must be on a logical volume that is able to change versions along with oVirt Node, and therefore should be left on root (/).

For information about the required storage sizes for each partition, see Storage Requirements.

-

-

The /boot directory should be defined as a standard partition.

-

The /var directory must be on a separate volume or disk.

-

Only XFS or Ext4 file systems are supported.

Configuring Manual Partitioning in a Kickstart File

The following example demonstrates how to configure manual partitioning in a Kickstart file.

clearpart --all

part /boot --fstype xfs --size=1000 --ondisk=sda

part pv.01 --size=42000 --grow

volgroup HostVG pv.01 --reserved-percent=20

logvol swap --vgname=HostVG --name=swap --fstype=swap --recommended

logvol none --vgname=HostVG --name=HostPool --thinpool --size=40000 --grow

logvol / --vgname=HostVG --name=root --thin --fstype=ext4 --poolname=HostPool --fsoptions="defaults,discard" --size=6000 --grow

logvol /var --vgname=HostVG --name=var --thin --fstype=ext4 --poolname=HostPool --fsoptions="defaults,discard" --size=15000

logvol /var/crash --vgname=HostVG --name=var_crash --thin --fstype=ext4 --poolname=HostPool --fsoptions="defaults,discard" --size=10000

logvol /var/log --vgname=HostVG --name=var_log --thin --fstype=ext4 --poolname=HostPool --fsoptions="defaults,discard" --size=8000

logvol /var/log/audit --vgname=HostVG --name=var_audit --thin --fstype=ext4 --poolname=HostPool --fsoptions="defaults,discard" --size=2000

logvol /home --vgname=HostVG --name=home --thin --fstype=ext4 --poolname=HostPool --fsoptions="defaults,discard" --size=1000

logvol /tmp --vgname=HostVG --name=tmp --thin --fstype=ext4 --poolname=HostPool --fsoptions="defaults,discard" --size=1000
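Before you use a custom Kickstart file, you can check it against the partitioning restrictions listed above. In this bash sketch, check_node_ks_partitions is a hypothetical name. The sketch assumes that each part or logvol directive sits on a single line, as in the example. It checks three things: that every required mount point has a logvol line with --thin, that /usr is not a separate mount, and that /boot is defined with part.

```shell
#!/usr/bin/env bash
# Hypothetical checker for the oVirt Node custom-partitioning restrictions.
# Prints one line per problem and returns 1 if any problem is found.
# Usage: check_node_ks_partitions KICKSTART_FILE
check_node_ks_partitions() {
    local ks=$1 mp rc=0
    local required=(/ /home /tmp /var /var/crash /var/log /var/log/audit)
    for mp in "${required[@]}"; do
        # Each required mount point needs a logvol line that carries --thin.
        if ! awk -v mp="$mp" '
            $1 == "logvol" && $2 == mp {
                for (i = 3; i <= NF; i++) if ($i == "--thin") found = 1
            }
            END { exit !found }' "$ks"; then
            echo "missing or not thin provisioned: $mp"
            rc=1
        fi
    done
    # /usr must stay on root, so a separate /usr partition or volume is an error.
    if awk '($1 == "part" || $1 == "logvol") && $2 == "/usr" { bad = 1 } END { exit !bad }' "$ks"; then
        echo "separate /usr is not allowed"
        rc=1
    fi
    # /boot should be a standard partition.
    if ! awk '$1 == "part" && $2 == "/boot" { ok = 1 } END { exit !ok }' "$ks"; then
        echo "/boot is not defined as a standard partition"
        rc=1
    fi
    return "$rc"
}
```

The sketch checks only the rules that can be read from the directives themselves. It does not check the file system types or the sizes from Storage Requirements.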

If you use |

Installing a DUD driver on a host without installer support

There are times when installing oVirt Node requires a Driver Update Disk (DUD), such as when using a hardware RAID device that is not supported by the default configuration of oVirt Node. In contrast with Enterprise Linux hosts, oVirt Node does not fully support using a DUD. As a result, the host fails to boot normally after installation because it does not detect the RAID device. Instead, it boots into emergency mode.

Example output:

Warning: /dev/test/rhvh-4.4-20210202.0+1 does not exist
Warning: /dev/test/swap does not exist
Entering emergency mode. Exit the shell to continue.

In such a case you can manually add the drivers before finishing the installation.

-

A machine onto which you are installing oVirt Node.

-

A DUD.

-

If you are using a USB drive for the DUD and oVirt Node, you must have at least two available USB ports.

-

Load the DUD on the host machine.

You can search for DUDs or modules for CentOS Stream at the following locations:

-

Install oVirt Node. See Installing oVirt Nodes in Installing oVirt as a self-hosted engine using the command line.

When installation completes, do not reboot the system.

If you want to access the DUD using SSH, do the following:

-

Add the string inst.sshd to the kernel command line:

<kernel_command_line> inst.sshd

-

Enable networking during the installation.

-

-

Enter console mode by pressing Ctrl + Alt + F3. Alternatively, you can connect to the installer using SSH.

-

Mount the DUD:

# mkdir /mnt/dud
# mount -r /dev/<dud_device> /mnt/dud

-

Copy the RPM file inside the DUD to the target machine’s disk:

# cp /mnt/dud/rpms/<path>/<rpm_file>.rpm /mnt/sysroot/root/

For example:

# cp /mnt/dud/rpms/x86_64/kmod-3w-9xxx-2.26.02.014-5.el8_3.elrepo.x86_64.rpm /mnt/sysroot/root/

-

Change the root directory to /mnt/sysroot:

# chroot /mnt/sysroot

-

Back up the current initrd images. For example:

# cp -p /boot/initramfs-4.18.0-240.15.1.el8_3.x86_64.img /boot/initramfs-4.18.0-240.15.1.el8_3.x86_64.img.bck1
# cp -p /boot/ovirt-node-ng-4.4.5.1-0.20210323.0+1/initramfs-4.18.0-240.15.1.el8_3.x86_64.img /boot/ovirt-node-ng-4.4.5.1-0.20210323.0+1/initramfs-4.18.0-240.15.1.el8_3.x86_64.img.bck1

-

Install the RPM file for the driver from the copy you made earlier.

For example:

# dnf install /root/kmod-3w-9xxx-2.26.02.014-5.el8_3.elrepo.x86_64.rpm

This package is not visible on the system after you reboot into the installed environment. If you need it later, for example to rebuild the initramfs, install the package again, after which the package remains.

If you update the host using dnf, the driver update persists, so you do not need to repeat this process.

If you do not have an internet connection, use the rpm command instead of dnf:

# rpm -ivh /root/kmod-3w-9xxx-2.26.02.014-5.el8_3.elrepo.x86_64.rpm

-

Create a new image, forcefully adding the driver:

# dracut --force --add-drivers <module_name> --kver <kernel_version>

For example:

# dracut --force --add-drivers 3w-9xxx --kver 4.18.0-240.15.1.el8_3.x86_64

-

Check the results. The new image should be larger, and include the driver. For example, compare the sizes of the original, backed-up image file and the new image file.

In this example, the new image file is 88739013 bytes, larger than the original 88717417 bytes:

# ls -ltr /boot/initramfs-4.18.0-240.15.1.el8_3.x86_64.img*
-rw-------. 1 root root 88717417 Jun 2 14:29 /boot/initramfs-4.18.0-240.15.1.el8_3.x86_64.img.bck1
-rw-------. 1 root root 88739013 Jun 2 17:47 /boot/initramfs-4.18.0-240.15.1.el8_3.x86_64.img

The new drivers should be part of the image file. For example, the 3w-9xxx module should be included:

# lsinitrd /boot/initramfs-4.18.0-240.15.1.el8_3.x86_64.img | grep 3w-9xxx
drwxr-xr-x 2 root root 0 Feb 22 15:57 usr/lib/modules/4.18.0-240.15.1.el8_3.x86_64/weak-updates/3w-9xxx
lrwxrwxrwx 1 root root 55 Feb 22 15:57 usr/lib/modules/4.18.0-240.15.1.el8_3.x86_64/weak-updates/3w-9xxx/3w-9xxx.ko -> ../../../4.18.0-240.el8.x86_64/extra/3w-9xxx/3w-9xxx.ko
drwxr-xr-x 2 root root 0 Feb 22 15:57 usr/lib/modules/4.18.0-240.el8.x86_64/extra/3w-9xxx
-rw-r--r-- 1 root root 80121 Nov 10 2020 usr/lib/modules/4.18.0-240.el8.x86_64/extra/3w-9xxx/3w-9xxx.ko

-

Copy the image to the directory under /boot that contains the kernel to be used in the layer being installed, for example:

# cp -p /boot/initramfs-4.18.0-240.15.1.el8_3.x86_64.img /boot/ovirt-node-ng-4.4.5.1-0.20210323.0+1/initramfs-4.18.0-240.15.1.el8_3.x86_64.img

-

Exit chroot.

-

Exit the shell.

-

If you used Ctrl + Alt + F3 to access a virtual terminal, move back to the installer by pressing Ctrl + Alt + F<n>, usually F1 or F5.

-

At the installer screen, reboot.

The machine should reboot successfully.
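You can script the backup and size-comparison steps of this procedure inside the chroot. In this bash sketch, backup_initramfs and initramfs_grew are hypothetical helper names. The boot directory and kernel version are parameters, for example /boot and 4.18.0-240.15.1.el8_3.x86_64 from the examples above. The sketch uses stat -c, which assumes GNU coreutils. A larger image is only a quick sanity check, and the lsinitrd listing remains the direct evidence that the module is included.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for the initramfs backup and verification steps.

# Copy every initramfs-KVER.img under BOOT_DIR, including the ones in
# ovirt-node-ng-* layer directories, to a .bck1 file, preserving attributes.
# Fails if no matching image is found.
# Usage: backup_initramfs BOOT_DIR KVER
backup_initramfs() {
    local boot=$1 kver=$2 img count=0
    while IFS= read -r -d '' img; do
        cp -p "$img" "$img.bck1" || return 1
        count=$((count + 1))
    done < <(find "$boot" -type f -name "initramfs-$kver.img" -print0)
    (( count > 0 ))
}

# Print how many bytes IMAGE grew relative to IMAGE.bck1; succeed only if it grew.
# Usage: initramfs_grew IMAGE
initramfs_grew() {
    local img=$1 old new
    old=$(stat -c %s "$img.bck1") || return 1
    new=$(stat -c %s "$img") || return 1
    echo "$(( new - old )) bytes added"
    (( new > old ))
}
```

With the sizes shown in the ls output above, initramfs_grew would report 88739013 - 88717417 = 21596 bytes added.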

Automating oVirt Node deployment

You can install oVirt Node (oVirt Node) without a physical media device by booting from a PXE server over the network with a Kickstart file that contains the answers to the installation questions.

When installing or reinstalling the host’s operating system, oVirt strongly recommends that you first detach any existing non-OS storage that is attached to the host to avoid accidental initialization of these disks, and with that, potential data loss.

General instructions for installing from a PXE server with a Kickstart file are available in the Enterprise Linux Installation Guide, as oVirt Node is installed in much the same way as Enterprise Linux. oVirt Node-specific instructions, with examples for deploying oVirt Node with Red Hat Satellite, are described below.

The automated oVirt Node deployment has 3 stages:

Preparing the installation environment

-

Visit the oVirt Node Download page.

-

Choose the version of oVirt Node to download and click its Installation ISO link.

-

Make the oVirt Node ISO image available over the network. See Installation Source on a Network in the Enterprise Linux Installation Guide.

-

Extract the squashfs.img hypervisor image file from the oVirt Node ISO:

# mkdir -p /mnt/rhvh
# mount -o loop /path/to/oVirt-Node-ISO /mnt/rhvh
# cp /mnt/rhvh/Packages/redhat-virtualization-host-image-update* /tmp
# cd /tmp
# rpm2cpio redhat-virtualization-host-image-update* | cpio -idmv

This squashfs.img file, located in the /tmp/usr/share/redhat-virtualization-host/image/ directory, is called redhat-virtualization-host-version_number_version.squashfs.img. It contains the hypervisor image for installation on the physical machine. Do not confuse it with the /LiveOS/squashfs.img file, which is used by the Anaconda inst.stage2 option.
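The extracted file name includes a version number, so a small lookup can help when you fill in the liveimg URL later. In this bash sketch, find_node_squashfs is a hypothetical name. It searches the extraction root for exactly one *.squashfs.img file under usr/share. Because only usr/share is searched, the installer's LiveOS/squashfs.img is never selected.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the hypervisor squashfs image extracted by rpm2cpio.
# Fails unless exactly one *.squashfs.img exists under ROOT/usr/share.
# Usage: find_node_squashfs EXTRACTION_ROOT   (for example, /tmp)
find_node_squashfs() {
    local root=$1 matches=()
    # Only usr/share is searched, so LiveOS/squashfs.img is never picked up.
    mapfile -t matches < <(find "$root/usr/share" -type f -name '*.squashfs.img' 2>/dev/null)
    if (( ${#matches[@]} != 1 )); then
        echo "expected one *.squashfs.img under $root/usr/share, found ${#matches[@]}" >&2
        return 1
    fi
    printf '%s\n' "${matches[0]}"
}
```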

Configuring the PXE server and the boot loader

-

Configure the PXE server. See Preparing for a Network Installation in the Enterprise Linux Installation Guide.

-

Copy the oVirt Node boot images to the TFTP boot directory, /var/lib/tftpboot/pxelinux/ in this example:

# cp /mnt/rhvh/images/pxeboot/{vmlinuz,initrd.img} /var/lib/tftpboot/pxelinux/

-

Create a rhvh label specifying the oVirt Node boot images in the boot loader configuration:

LABEL rhvh
MENU LABEL Install oVirt Node
KERNEL /var/lib/tftpboot/pxelinux/vmlinuz
APPEND initrd=/var/lib/tftpboot/pxelinux/initrd.img inst.stage2=URL/to/oVirt-Node-ISO

oVirt Node boot loader configuration example for Red Hat Satellite

If you are using information from Red Hat Satellite to provision the host, you must create a global or host group level parameter called rhvh_image and populate it with the directory URL where the ISO is mounted or extracted:

<%#
kind: PXELinux
name: oVirt Node PXELinux
%>
# Created for booting new hosts
#

DEFAULT rhvh

LABEL rhvh
KERNEL <%= @kernel %>
APPEND initrd=<%= @initrd %> inst.ks=<%= foreman_url("provision") %> inst.stage2=<%= @host.params["rhvh_image"] %> intel_iommu=on console=tty0 console=ttyS1,115200n8 ssh_pwauth=1 local_boot_trigger=<%= foreman_url("built") %>
IPAPPEND 2

-

Make the content of the oVirt Node ISO locally available and export it to the network, for example, using an HTTPD server:

# cp -a /mnt/rhvh/ /var/www/html/rhvh-install
# curl URL/to/oVirt-Node-ISO/rhvh-install
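The rhvh boot loader entry from this stage can be generated rather than typed by hand. The following is a minimal sketch: the function name rhvh_pxe_entry and its argument order are hypothetical, and the LABEL, MENU LABEL, KERNEL, and APPEND lines mirror the example above. Because PXELINUX separates APPEND arguments with spaces, the sketch rejects URLs that contain whitespace.

```bash
#!/usr/bin/env bash
# Sketch: print a PXELINUX "rhvh" entry like the one shown in the text.
# rhvh_pxe_entry is a hypothetical helper.
# Usage: rhvh_pxe_entry TFTP_DIR STAGE2_URL [KS_URL]
rhvh_pxe_entry() {
    local tftp_dir="$1" stage2_url="$2" ks_url="${3:-}"
    # APPEND arguments are space-separated, so a space would split a URL.
    case "$stage2_url$ks_url" in
        *[[:space:]]*) echo "URLs must not contain whitespace" >&2; return 1 ;;
    esac
    local append="initrd=$tftp_dir/initrd.img inst.stage2=$stage2_url"
    # Add the Kickstart location only when one is supplied.
    if [ -n "$ks_url" ]; then
        append="$append inst.ks=$ks_url"
    fi
    printf 'LABEL rhvh\n'
    printf 'MENU LABEL Install oVirt Node\n'
    printf 'KERNEL %s/vmlinuz\n' "$tftp_dir"
    printf 'APPEND %s\n' "$append"
}
```

For example, rhvh_pxe_entry /var/lib/tftpboot/pxelinux http://example.com/rhvh-install prints the four lines of the entry. Passing a third argument adds the inst.ks option used in the Kickstart stage.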

Creating and running a Kickstart file

-

Create a Kickstart file and make it available over the network. See Kickstart Installations in the Enterprise Linux Installation Guide.

-

Ensure that the Kickstart file meets the following oVirt-specific requirements:

-

The %packages section is not required for oVirt Node. Instead, use the liveimg option and specify the redhat-virtualization-host-version_number_version.squashfs.img file from the oVirt Node ISO image:

liveimg --url=http://example.com/tmp/usr/share/redhat-virtualization-host/image/redhat-virtualization-host-version_number_version.squashfs.img

-

Autopartitioning is highly recommended, but use caution: ensure that the local disk is detected first, and include the ignoredisk command to restrict the installation to the local disk, such as sda. With --only-use, the installer uses only the listed disk and ignores all others. To ensure that a particular drive is used, oVirt recommends using ignoredisk --only-use=/dev/disk/<path> or ignoredisk --only-use=/dev/disk/<ID>. Combine autopart with one of the following ignoredisk forms:

autopart --type=thinp
ignoredisk --only-use=sda
ignoredisk --only-use=/dev/disk/<path>
ignoredisk --only-use=/dev/disk/<ID>

Autopartitioning requires thin provisioning.

The --no-home option does not work in oVirt Node because /home is a required directory.

If your installation requires manual partitioning, see Custom Partitioning for a list of limitations that apply to partitions and an example of manual partitioning in a Kickstart file.

-

A %post section that calls the nodectl init command is required:

%post
nodectl init
%end

Ensure that the nodectl init command is at the very end of the %post section but before the reboot code, if any.

Kickstart example for deploying oVirt Node on its own

This Kickstart example shows you how to deploy oVirt Node. You can include additional commands and options as required.

This example assumes that all disks are empty and can be initialized. If you have attached disks with data, either remove them or exclude them with the ignoredisk command.

liveimg --url=http://FQDN/tmp/usr/share/redhat-virtualization-host/image/redhat-virtualization-host-version_number_version.squashfs.img
clearpart --all
autopart --type=thinp
rootpw --plaintext ovirt
timezone --utc America/Phoenix
zerombr
text

reboot

%post --erroronfail
nodectl init
%end

-

-

Add the Kickstart file location to the boot loader configuration file on the PXE server:

APPEND initrd=/var/lib/tftpboot/pxelinux/initrd.img inst.stage2=URL/to/oVirt-Node-ISO inst.ks=URL/to/oVirt-Node-ks.cfg

-

Install oVirt Node following the instructions in Booting from the Network Using PXE in the Enterprise Linux Installation Guide.
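Before booting hosts, you can catch common mistakes by checking the Kickstart file against the oVirt-specific requirements in this stage. The following is a minimal sketch: check_rhvh_ks is a hypothetical helper that performs simple line-based checks rather than parsing Kickstart fully. It treats only lines starting with reboot as reboot code inside %post.

```bash
#!/usr/bin/env bash
# Sketch: check a Kickstart file against the oVirt Node requirements:
# liveimg present, no %packages section, no --no-home, and nodectl init as
# the last %post command apart from reboot lines.
# check_rhvh_ks is a hypothetical helper; it reports every problem it finds.
check_rhvh_ks() {
    local ks="$1" status=0
    if ! grep -q '^liveimg ' "$ks"; then
        echo "missing liveimg command" >&2; status=1
    fi
    if grep -q '^%packages' "$ks"; then
        echo "%packages section is not used for oVirt Node" >&2; status=1
    fi
    if grep -q -- '--no-home' "$ks"; then
        echo "--no-home does not work: /home is required" >&2; status=1
    fi
    # Track the last non-blank, non-comment, non-reboot line inside any
    # %post ... %end section, with surrounding whitespace trimmed.
    local last
    last="$(awk '
        /^%post/ { inpost = 1; next }
        /^%end/  { inpost = 0; next }
        inpost && NF && $1 !~ /^#/ && $1 != "reboot" {
            line = $0; gsub(/^[ \t]+|[ \t]+$/, "", line); cmd = line
        }
        END { print cmd }' "$ks")"
    if [ "$last" != "nodectl init" ]; then
        echo "nodectl init must be the last command in %post" >&2; status=1
    fi
    return "$status"
}
```

For example, check_rhvh_ks oVirt-Node-ks.cfg should return success for the Kickstart example above. A file that has a %packages section or runs other commands after nodectl init should fail with a message for each problem.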

4.2. Enterprise Linux hosts

4.2.1. Installing Enterprise Linux hosts

An Enterprise Linux host is based on a standard basic installation of Enterprise Linux 9 or later on a physical server, with the Enterprise Linux Server and oVirt repositories enabled.

The oVirt project also provides packages for Enterprise Linux 9.

For detailed installation instructions, see Performing a standard EL installation.

The host must meet the minimum host requirements.

| When installing or reinstalling the host’s operating system, oVirt strongly recommends that you first detach any existing non-OS storage attached to the host to avoid accidentally initializing these disks and potentially losing data. |

| Virtualization must be enabled in your host’s BIOS settings. For information on changing your host’s BIOS settings, refer to your host’s hardware documentation. |

| Do not install third-party watchdogs on Enterprise Linux hosts. They can interfere with the watchdog daemon provided by VDSM. |
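As a quick pre-installation check for the BIOS requirement above, you can look for the Intel (vmx) or AMD (svm) CPU flags. The following is a minimal sketch: has_virt_flags is a hypothetical helper, and the cpuinfo path is a parameter only so that the helper can be tried on a sample file. If neither flag appears, virtualization is most likely disabled or unsupported. The presence of a flag is a useful sign but is not conclusive on every platform and kernel.

```bash
#!/usr/bin/env bash
# Sketch: report whether the CPU advertises hardware virtualization.
# has_virt_flags is a hypothetical helper; it defaults to /proc/cpuinfo.
has_virt_flags() {
    local cpuinfo="${1:-/proc/cpuinfo}"
    # Only the "flags" lines list CPU features; -w avoids partial matches
    # such as "vme" when searching for "vmx".
    grep -E '^flags[[:space:]]*:' "$cpuinfo" | grep -Ewq 'vmx|svm'
}
```

For example, run has_virt_flags && echo "virtualization flags present" on the host before installing.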

4.2.2. Installing Cockpit on Enterprise Linux hosts

You can install Cockpit for monitoring the host’s resources and performing administrative tasks.

-

Install the dashboard packages:

# dnf install cockpit-ovirt-dashboard

-

Enable and start the cockpit.socket service:

# systemctl enable cockpit.socket
# systemctl start cockpit.socket

-

Check if Cockpit is an active service in the firewall:

# firewall-cmd --list-services

You should see cockpit listed. If it is not, enter the following with root permissions to add cockpit as a service to your firewall:

# firewall-cmd --permanent --add-service=cockpit

The --permanent option keeps the cockpit service active after rebooting. Because --permanent changes only the permanent configuration, also run firewall-cmd --reload to apply the change to the running firewall.

You can log in to the Cockpit web interface at https://HostFQDNorIP:9090.
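The firewall check and fix above can be combined so that cockpit is added only when it is missing. The following is a minimal sketch: the helper names service_listed and ensure_cockpit_service are hypothetical, while the firewall-cmd options are the ones used in this procedure.

```bash
#!/usr/bin/env bash
# Sketch: add the cockpit firewall service only when it is not listed.
# service_listed and ensure_cockpit_service are hypothetical helpers.

# Return 0 if service $2 appears in the space-separated list $1.
service_listed() {
    local svc
    for svc in $1; do   # word splitting of the list is intended here
        [ "$svc" = "$2" ] && return 0
    done
    return 1
}

# Run as root on the host.
ensure_cockpit_service() {
    if service_listed "$(firewall-cmd --list-services)" cockpit; then
        echo "cockpit is already allowed"
        return 0
    fi
    firewall-cmd --permanent --add-service=cockpit &&
        firewall-cmd --reload   # apply the permanent change to the runtime
}
```

The comparison is done on whole words, so a service with a similar name, such as a hypothetical cockpit-extra, is not mistaken for cockpit.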

4.3. Recommended Practices for Configuring Host Networks

| Always use the oVirt Engine to modify the network configuration of hosts in your clusters. Otherwise, you might create an unsupported configuration. For details, see Network Manager Stateful Configuration (nmstate). |

If your network environment is complex, you may need to configure a host network manually before adding the host to the oVirt Engine.

Consider the following practices for configuring a host network:

-

Configure the network with Cockpit. Alternatively, you can use nmtui or nmcli.

-

If a network is not required for a self-hosted engine deployment or for adding a host to the Engine, configure the network in the Administration Portal after adding the host to the Engine. See Creating a New Logical Network in a Data Center or Cluster.

-

Use the following naming conventions:

-

VLAN devices: VLAN_NAME_TYPE_RAW_PLUS_VID_NO_PAD

-

VLAN interfaces: physical_device.VLAN_ID (for example, eth0.23, eth1.128, enp3s0.50)

-

Bond interfaces: bondnumber (for example, bond0, bond1)

-

VLANs on bond interfaces: bondnumber.VLAN_ID (for example, bond0.50, bond1.128)

-

-

Use network bonding. Network teaming is not supported in oVirt and will cause errors if the host is used to deploy a self-hosted engine or added to the Engine.

-

Use recommended bonding modes:

-

If the ovirtmgmt network is not used by virtual machines, the network may use any supported bonding mode.

-

If the ovirtmgmt network is used by virtual machines, see Which bonding modes work when used with a bridge that virtual machine guests or containers connect to?.

-

oVirt’s default bonding mode is (Mode 4) Dynamic Link Aggregation. If your switch does not support Link Aggregation Control Protocol (LACP), use (Mode 1) Active-Backup. See Bonding Modes for details.

-

-

Configure a VLAN on a physical NIC as in the following example (although nmcli is used, you can use any tool):

# nmcli connection add type vlan con-name vlan50 ifname eth0.50 dev eth0 id 50
# nmcli con mod vlan50 +ipv4.dns 8.8.8.8 +ipv4.addresses 123.123.0.1/24 +ipv4.gateway 123.123.0.254

-

Configure a VLAN on a bond as in the following example (although nmcli is used, you can use any tool):

# nmcli connection add type bond con-name bond0 ifname bond0 bond.options "mode=active-backup,miimon=100" ipv4.method disabled ipv6.method ignore
# nmcli connection add type ethernet con-name eth0 ifname eth0 master bond0 slave-type bond
# nmcli connection add type ethernet con-name eth1 ifname eth1 master bond0 slave-type bond
# nmcli connection add type vlan con-name vlan50 ifname bond0.50 dev bond0 id 50
# nmcli con mod vlan50 +ipv4.dns 8.8.8.8 +ipv4.addresses 123.123.0.1/24 +ipv4.gateway 123.123.0.254

-

Do not disable firewalld.

-

Customize the firewall rules in the Administration Portal after adding the host to the Engine. See Configuring Host Firewall Rules.
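To apply the naming conventions and the VLAN-on-bond example consistently, you can generate the nmcli commands from a few parameters and review them before running anything. The following is a minimal sketch: vlan_on_bond_cmds and its argument order are hypothetical. It only prints commands and leaves out the addressing step (nmcli con mod ...). MODE is a Linux bonding mode name, for example 802.3ad (Mode 4) or active-backup (Mode 1).

```bash
#!/usr/bin/env bash
# Sketch: validate names and print (not run) the nmcli commands for a VLAN
# on a bond, mirroring the bond example in this section.
# vlan_on_bond_cmds is a hypothetical helper.
# Usage: vlan_on_bond_cmds BOND VLAN_ID MODE NIC...
vlan_on_bond_cmds() {
    local bond="$1" vid="$2" mode="$3"
    shift 3
    # Bond interfaces follow the bondnumber convention, e.g. bond0.
    if ! [[ "$bond" =~ ^bond[0-9]+$ ]]; then
        echo "bond name must follow bondnumber, e.g. bond0" >&2; return 1
    fi
    # No zero padding (NO_PAD); 802.1Q VLAN IDs range from 1 to 4094.
    if ! [[ "$vid" =~ ^[1-9][0-9]*$ ]] || (( vid > 4094 )); then
        echo "invalid VLAN ID: $vid" >&2; return 1
    fi
    if [ "$#" -eq 0 ]; then
        echo "at least one NIC is required" >&2; return 1
    fi
    echo "nmcli connection add type bond con-name $bond ifname $bond bond.options \"mode=$mode,miimon=100\" ipv4.method disabled ipv6.method ignore"
    local nic
    for nic in "$@"; do
        echo "nmcli connection add type ethernet con-name $nic ifname $nic master $bond slave-type bond"
    done
    # The VLAN interface name follows bondnumber.VLAN_ID, e.g. bond0.50.
    echo "nmcli connection add type vlan con-name vlan$vid ifname $bond.$vid dev $bond id $vid"
}
```

For example, vlan_on_bond_cmds bond0 50 active-backup eth0 eth1 prints the first four commands of the bond example above. A name such as team0 or a padded ID such as 050 is rejected.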

4.4. Adding Standard Hosts to the oVirt Engine

| Always use the oVirt Engine to modify the network configuration of hosts in your clusters. Otherwise, you might create an unsupported configuration. For details, see Network Manager Stateful Configuration (nmstate). |

Adding a host to your oVirt environment can take some time, because the platform performs virtualization checks, installs packages, and creates a bridge.

-

From the Administration Portal, click .

-

Click New.

-

Use the drop-down list to select the Data Center and Host Cluster for the new host.

-

Enter the Name and the Address of the new host. The standard SSH port, port 22, is auto-filled in the SSH Port field.

-

Select an authentication method to use for the Engine to access the host.

-

Enter the root user’s password to use password authentication.

-

Alternatively, copy the key displayed in the SSH PublicKey field to /root/.ssh/authorized_keys on the host to use public key authentication.

-

-

Optionally, click the Advanced Parameters button to change the following advanced host settings:

-

Disable automatic firewall configuration.

-

Add a host SSH fingerprint to increase security. You can add it manually, or fetch it automatically.

-

-

Optionally, configure power management if the host has a supported power management card. For information on power management configuration, see Host Power Management Settings Explained in the Administration Guide.

-

Click OK.
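If you chose public key authentication, the key from the SSH PublicKey field must end up in /root/.ssh/authorized_keys on the host. The following is a minimal sketch of doing that idempotently: add_authorized_key is a hypothetical helper, and the target file is a parameter only so that the helper can be tried on a scratch file. It creates the directory and file with restrictive permissions and appends the key only if that exact line is not already present.

```bash
#!/usr/bin/env bash
# Sketch: append the Engine's public key to root's authorized_keys once.
# add_authorized_key is a hypothetical helper; pass the key text exactly as
# displayed in the SSH PublicKey field.
# Usage: add_authorized_key "KEY" [FILE]
add_authorized_key() {
    local key="$1" file="${2:-/root/.ssh/authorized_keys}"
    local dir
    dir="$(dirname "$file")"
    # sshd ignores keys in files or directories with loose permissions.
    mkdir -p "$dir" && chmod 700 "$dir" || return 1
    touch "$file" && chmod 600 "$file" || return 1
    # Append only if the exact line is not already present.
    if ! grep -qxF -- "$key" "$file"; then
        printf '%s\n' "$key" >> "$file"
    fi
}
```

Running the helper twice with the same key leaves a single copy of the key in the file.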